Journal of Machine Learning Research 13 (2012) 723-773 Submitted 4/08; Revised 11/11; Published 3/12

A Kernel Two-Sample Test

Arthur Gretton∗ ARTHUR.GRETTON@GMAIL.COM
MPI for Intelligent Systems
Spemannstrasse 38
72076 Tübingen, Germany

Karsten M. Borgwardt† KARSTEN.BORGWARDT@TUEBINGEN.MPG.DE
Machine Learning and Computational Biology Research Group
Max Planck Institutes Tübingen
Spemannstrasse 38
72076 Tübingen, Germany

Malte J. Rasch‡ MALTE@MAIL.BNU.EDU.CN
19 XinJieKouWai St.
State Key Laboratory of Cognitive Neuroscience and Learning,
Beijing Normal University,
Beijing, 100875, P.R. China

Bernhard Schölkopf BERNHARD.SCHOELKOPF@TUEBINGEN.MPG.DE
MPI for Intelligent Systems
Spemannstrasse 38
72076 Tübingen, Germany

Alexander Smola§ ALEX@SMOLA.ORG
Yahoo! Research
2821 Mission College Blvd
Santa Clara, CA 95054, USA

Editor: Nicolas Vayatis

Abstract

We propose a framework for analyzing and comparing distributions, which we use to construct statistical tests to determine if two samples are drawn from different distributions. Our test statistic is the largest difference in expectations over functions in the unit ball of a reproducing kernel Hilbert space (RKHS), and is called the maximum mean discrepancy (MMD). We present two distribution-free tests based on large deviation bounds for the MMD, and a third test based on the asymptotic distribution of this statistic. The MMD can be computed in quadratic time, although efficient linear time approximations are available. Our statistic is an instance of an integral probability metric, and various classical metrics on distributions are obtained when alternative function classes are used in place of an RKHS. We apply our two-sample tests to a variety of problems, including attribute matching for databases using the Hungarian marriage method, where they perform strongly. Excellent performance is also obtained when comparing distributions over graphs, for which these are the first such tests.

∗. Also at Gatsby Computational Neuroscience Unit, CSML, 17 Queen Square, London WC1N 3AR, UK.

†. This work was carried out while K.M.B. was with the Ludwig-Maximilians-Universität München.

‡. This work was carried out while M.J.R. was with the Graz University of Technology.

§. Also at The Australian National University, Canberra, ACT 0200, Australia.

© 2012 Arthur Gretton, Karsten M. Borgwardt, Malte J. Rasch, Bernhard Schölkopf and Alexander Smola.

GRETTON, BORGWARDT, RASCH, SCHÖLKOPF AND SMOLA

Keywords: kernel methods, two-sample test, uniform convergence bounds, schema matching, integral probability metric, hypothesis testing

1. Introduction

We address the problem of comparing samples from two probability distributions, by proposing statistical tests of the null hypothesis that these distributions are equal against the alternative hypothesis that these distributions are different (this is called the two-sample problem). Such tests have application in a variety of areas. In bioinformatics, it is of interest to compare microarray data from identical tissue types as measured by different laboratories, to detect whether the data may be analysed jointly, or whether differences in experimental procedure have caused systematic differences in the data distributions. Equally of interest are comparisons between microarray data from different tissue types, either to determine whether two subtypes of cancer may be treated as statistically indistinguishable from a diagnosis perspective, or to detect differences in healthy and cancerous tissue. In database attribute matching, it is desirable to merge databases containing multiple fields, where it is not known in advance which fields correspond: the fields are matched by maximising the similarity in the distributions of their entries.

We test whether distributions p and q are different on the basis of samples drawn from each of them, by finding a well-behaved (e.g., smooth) function which is large on the points drawn from p, and small (as negative as possible) on the points from q. We use as our test statistic the difference between the mean function values on the two samples; when this is large, the samples are likely from different distributions. We call this test statistic the Maximum Mean Discrepancy (MMD).

Clearly the quality of the MMD as a statistic depends on the class F of smooth functions that define it. On the one hand, F must be "rich enough" so that the population MMD vanishes if and only if p = q. On the other hand, for the test to be consistent in power, F needs to be "restrictive" enough for the empirical estimate of the MMD to converge quickly to its expectation as the sample size increases. We will use the unit balls in characteristic reproducing kernel Hilbert spaces (Fukumizu et al., 2008; Sriperumbudur et al., 2010b) as our function classes, since these will be shown to satisfy both of the foregoing properties. We also review classical metrics on distributions, namely the Kolmogorov-Smirnov and Earth-Mover's distances, which are based on different function classes; collectively these are known as integral probability metrics (Müller, 1997). On a more practical note, the MMD has a reasonable computational cost when compared with other two-sample tests: given m points sampled from p and n from q, the cost is $O((m+n)^2)$ time. We also propose a test statistic with a computational cost of $O(m+n)$: the associated test can achieve a given Type II error at a lower overall computational cost than the quadratic-cost test, by looking at a larger volume of data.

We define three nonparametric statistical tests based on the MMD. The first two tests are distribution-free, meaning they make no assumptions regarding p and q, albeit at the expense of being conservative in detecting differences between the distributions. The third test is based on the asymptotic distribution of the MMD, and is in practice more sensitive to differences in distribution at small sample sizes. The present work synthesizes and expands on results of Gretton et al. (2007a,b) and Smola et al. (2007),¹ who in turn build on the earlier work of Borgwardt et al. (2006). Note that the latter addresses only the third kind of test, and that the approach of Gretton et al. (2007a,b) is rigorous in its treatment of the asymptotic distribution of the test statistic under the null hypothesis.

1. In particular, most of the proofs here were not provided by Gretton et al. (2007a), but in an accompanying technical report (Gretton et al., 2008a), which this document replaces.

We begin our presentation in Section 2 with a formal definition of the MMD. We review the notion of a characteristic RKHS, and establish that when F is a unit ball in a characteristic RKHS, then the population MMD is zero if and only if p = q. We further show that universal RKHSs in the sense of Steinwart (2001) are characteristic. In Section 3, we give an overview of hypothesis testing as it applies to the two-sample problem, and review alternative test statistics, including the $L_2$ distance between kernel density estimates (Anderson et al., 1994), which is the prior approach closest to our work. We present our first two hypothesis tests in Section 4, based on two different bounds on the deviation between the population and empirical MMD. We take a different approach in Section 5, where we use the asymptotic distribution of the empirical MMD estimate as the basis for a third test. When large volumes of data are available, the cost of computing the MMD (quadratic in the sample size) may be excessive: we therefore propose in Section 6 a modified version of the MMD statistic that has a linear cost in the number of samples, and an associated asymptotic test. In Section 7, we provide an overview of methods related to the MMD in the statistics and machine learning literature. We also review alternative function classes for which the MMD defines a metric on probability distributions. Finally, in Section 8, we demonstrate the performance of MMD-based two-sample tests on problems from neuroscience, bioinformatics, and attribute matching using the Hungarian marriage method. Our approach performs well on high dimensional data with low sample size; in addition, we are able to successfully distinguish distributions on graph data, for which ours is the first proposed test.

A Matlab implementation of the tests is at www.gatsby.ucl.ac.uk/~gretton/mmd/mmd.htm.

2. The Maximum Mean Discrepancy

In this section, we present the maximum mean discrepancy (MMD), and describe conditions under which it is a metric on the space of probability distributions. The MMD is defined in terms of particular function spaces that witness the difference in distributions: we therefore begin in Section 2.1 by introducing the MMD for an arbitrary function space. In Section 2.2, we compute both the population MMD and two empirical estimates when the associated function space is a reproducing kernel Hilbert space, and in Section 2.3 we derive the RKHS function that witnesses the MMD for a given pair of distributions.

2.1 Definition of the Maximum Mean Discrepancy

Our goal is to formulate a statistical test that answers the following question:

Problem 1 Let x and y be random variables defined on a topological space X, with respective Borel probability measures p and q. Given observations $X := \{x_1, \ldots, x_m\}$ and $Y := \{y_1, \ldots, y_n\}$, independently and identically distributed (i.i.d.) from p and q, respectively, can we decide whether $p \neq q$?

Where there is no ambiguity, we use the shorthand notation $\mathbf{E}_x[f(x)] := \mathbf{E}_{x\sim p}[f(x)]$ and $\mathbf{E}_y[f(y)] := \mathbf{E}_{y\sim q}[f(y)]$ to denote expectations with respect to p and q, respectively, where x ∼ p indicates x has distribution p. To start with, we wish to determine a criterion that, in the population setting, takes on a unique and distinctive value only when p = q. It will be defined based on Lemma 9.3.2 of Dudley (2002).

Lemma 1 Let (X,d) be a metric space, and let p, q be two Borel probability measures defined on X. Then p = q if and only if $\mathbf{E}_x(f(x)) = \mathbf{E}_y(f(y))$ for all $f \in C(X)$, where C(X) is the space of bounded continuous functions on X.

Although C(X) in principle allows us to identify p = q uniquely, it is not practical to work with such a rich function class in the finite sample setting. We thus define a more general class of statistic, for as yet unspecified function classes F, to measure the disparity between p and q (Fortet and Mourier, 1953; Müller, 1997).

Definition 2 Let F be a class of functions $f : X \to \mathbb{R}$ and let p, q, x, y, X, Y be defined as above. We define the maximum mean discrepancy (MMD) as

$$\mathrm{MMD}[F,p,q] := \sup_{f\in F}\left(\mathbf{E}_x[f(x)] - \mathbf{E}_y[f(y)]\right). \qquad (1)$$

In the statistics literature, this is known as an integral probability metric (Müller, 1997). A biased² empirical estimate of the MMD is obtained by replacing the population expectations with empirical expectations computed on the samples X and Y,

$$\mathrm{MMD}_b[F,X,Y] := \sup_{f\in F}\left(\frac{1}{m}\sum_{i=1}^m f(x_i) - \frac{1}{n}\sum_{i=1}^n f(y_i)\right). \qquad (2)$$

We must therefore identify a function class that is rich enough to uniquely identify whether p = q, yet restrictive enough to provide useful finite sample estimates (the latter property will be established in subsequent sections).
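To make Definition 2 and (2) concrete before turning to RKHSs, the following minimal Python sketch evaluates $\mathrm{MMD}_b$ for one simple, illustrative choice of class: the linear functions $f(x) = \langle w, x\rangle$ on $\mathbb{R}^2$ with $\|w\| \le 1$. For this class the supremum is the Euclidean norm of the difference of sample means, which the sketch checks against a brute-force scan over unit vectors. The function names and the data are hypothetical.

```python
import math

def mean(points):
    """Coordinate-wise mean of a list of 2-D points."""
    m = len(points)
    return (sum(p[0] for p in points) / m, sum(p[1] for p in points) / m)

def mmd_b_linear_scan(X, Y, num_angles=3600):
    """Approximate eq. (2) for F = {x -> <w, x> : ||w|| <= 1} by scanning unit vectors w.

    The objective is linear in w, so the supremum is attained on the unit circle.
    """
    mx, my = mean(X), mean(Y)
    best = 0.0  # w = 0 lies in F, so the supremum is never negative
    for k in range(num_angles):
        theta = 2 * math.pi * k / num_angles
        w = (math.cos(theta), math.sin(theta))
        # (1/m) sum_i f(x_i) - (1/n) sum_i f(y_i) with f(x) = <w, x>
        value = w[0] * (mx[0] - my[0]) + w[1] * (mx[1] - my[1])
        best = max(best, value)
    return best

def mmd_b_linear_closed_form(X, Y):
    """Closed form for this class: the norm of the difference of the sample means."""
    mx, my = mean(X), mean(Y)
    return math.hypot(mx[0] - my[0], mx[1] - my[1])

X = [(0.0, 0.0), (1.0, 2.0), (2.0, 1.0)]
Y = [(3.0, 0.0), (4.0, 1.0)]
print(mmd_b_linear_scan(X, Y), mmd_b_linear_closed_form(X, Y))
```

This class is too restrictive for two-sample testing, because it only compares means. Two distributions with equal means give a population value of zero, which is why a richer class is needed.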

2.2 The MMD in Reproducing Kernel Hilbert Spaces

In the present section, we propose as our MMD function class F the unit ball in a reproducing kernel Hilbert space H. We will provide finite sample estimates of this quantity (both biased and unbiased), and establish conditions under which the MMD can be used to distinguish between probability measures. Other possible function classes F are discussed in Sections 7.1 and 7.2.

We first review some properties of H (Schölkopf and Smola, 2002). Since H is an RKHS, the operator of evaluation $\delta_x$ mapping $f \in H$ to $f(x) \in \mathbb{R}$ is continuous. Thus, by the Riesz representation theorem (Reed and Simon, 1980, Theorem II.4), there is a feature mapping φ(x) from X to H such that $f(x) = \langle f, \phi(x)\rangle_H$. This feature mapping takes the canonical form $\phi(x) = k(x,\cdot)$ (Steinwart and Christmann, 2008, Lemma 4.19), where $k(x_1,x_2) : X \times X \to \mathbb{R}$ is positive definite, and the notation $k(x,\cdot)$ indicates the kernel has one argument fixed at x, and the second free. Note in particular that $\langle \phi(x), \phi(y)\rangle_H = k(x,y)$. We will generally use the more concise notation φ(x) for the feature mapping, although in some cases it will be clearer to write $k(x,\cdot)$.

We next extend the notion of feature map to the embedding of a probability distribution: we will define an element $\mu_p \in H$ such that $\mathbf{E}_x f = \langle f, \mu_p\rangle_H$ for all $f \in H$, which we call the mean embedding of p. Embeddings of probability measures into reproducing kernel Hilbert spaces are well established in the statistics literature: see Berlinet and Thomas-Agnan (2004, Chapter 4) for further detail and references. We begin by establishing conditions under which the mean embedding $\mu_p$ exists (Fukumizu et al., 2004, p. 93; Sriperumbudur et al., 2010b, Theorem 1).

2. The empirical MMD defined below has an upward bias; we will define an unbiased statistic in the following section.

Lemma 3 If k(·,·) is measurable and $\mathbf{E}_x\sqrt{k(x,x)} < \infty$ then $\mu_p \in H$.

Proof The linear operator $T_p f := \mathbf{E}_x f$ for all $f \in H$ is bounded under the assumption, since, by the Cauchy-Schwarz inequality and $\|\phi(x)\|_H = \sqrt{k(x,x)}$,

$$|T_p f| = |\mathbf{E}_x f| \le \mathbf{E}_x |f| = \mathbf{E}_x |\langle f, \phi(x)\rangle_H| \le \mathbf{E}_x\left(\sqrt{k(x,x)}\right)\|f\|_H.$$

Hence by the Riesz representation theorem, there exists a $\mu_p \in H$ such that $T_p f = \langle f, \mu_p\rangle_H$. If we set $f = \phi(t) = k(t,\cdot)$, we obtain $\mu_p(t) = \langle \mu_p, k(t,\cdot)\rangle_H = \mathbf{E}_x k(t,x)$: in other words, the mean embedding of the distribution p is the expectation under p of the canonical feature map.
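The defining property $\mathbf{E}_x f = \langle f, \mu_p\rangle_H$ can be checked numerically when p is replaced by the empirical distribution of a sample X. The minimal sketch below assumes a Gaussian kernel on the real line and a function f that is a finite combination of kernel sections. It computes $\langle f, \mu_X\rangle_H$ using only the reproducing property, and compares the result with the sample mean of f. All names are illustrative.

```python
import math

def gaussian_kernel(a, b, sigma=1.0):
    """Gaussian kernel k(a, b) = exp(-(a - b)^2 / (2 sigma^2)) on the real line."""
    return math.exp(-((a - b) ** 2) / (2.0 * sigma ** 2))

def empirical_mean_embedding(sample, kernel=gaussian_kernel):
    """t -> (1/m) sum_i k(x_i, t): the empirical counterpart of mu_p(t) = E_x k(t, x)."""
    m = len(sample)
    return lambda t: sum(kernel(x, t) for x in sample) / m

def inner_with_embedding(alphas, centers, sample, kernel=gaussian_kernel):
    """<f, mu_X>_H for f = sum_j alpha_j k(z_j, .).

    By the reproducing property <k(z_j, .), mu_X>_H = mu_X(z_j), so no explicit
    feature vectors are needed."""
    mu_X = empirical_mean_embedding(sample, kernel)
    return sum(a * mu_X(z) for a, z in zip(alphas, centers))

def sample_mean_of_f(alphas, centers, sample, kernel=gaussian_kernel):
    """(1/m) sum_i f(x_i) for the same f, evaluating f pointwise."""
    f = lambda x: sum(a * kernel(z, x) for a, z in zip(alphas, centers))
    return sum(f(x) for x in sample) / len(sample)

X = [-1.2, 0.3, 0.8, 2.0]
alphas, centers = [0.5, -1.0, 2.0], [0.0, 1.0, -0.5]
print(inner_with_embedding(alphas, centers, X), sample_mean_of_f(alphas, centers, X))
```

The two printed values agree up to rounding, because the kernel is symmetric and the two computations differ only in the order of the double sum.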

We next show that the MMD may be expressed as the distance in H between mean embeddings (Borgwardt et al., 2006).

Lemma 4 Assume the condition in Lemma 3 for the existence of the mean embeddings $\mu_p$, $\mu_q$ is satisfied. Then

$$\mathrm{MMD}^2[F,p,q] = \|\mu_p - \mu_q\|_H^2.$$

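Proof

$$\mathrm{MMD}^2[F,p,q] = \left[\sup_{\|f\|_H \le 1}\left(\mathbf{E}_x[f(x)] - \mathbf{E}_y[f(y)]\right)\right]^2 = \left[\sup_{\|f\|_H \le 1}\langle \mu_p - \mu_q, f\rangle_H\right]^2 = \|\mu_p - \mu_q\|_H^2.$$

The final equality holds because the supremum of $\langle g, f\rangle_H$ over the unit ball is attained at $f = g/\|g\|_H$ and equals $\|g\|_H$.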
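Lemma 4 also applies to the empirical distributions of two samples, and it can be verified directly whenever a kernel has a finite-dimensional feature map. The sketch below assumes the degree-two polynomial kernel $k(a,b) = (1+ab)^2$ on the real line. For this kernel $\phi(a) = (1, \sqrt{2}a, a^2)$ is one explicit feature map. The sketch computes $\|\mu_X - \mu_Y\|^2$ once in feature space and once through kernel evaluations alone. Both the kernel choice and the function names are illustrative.

```python
import math

def poly2_kernel(a, b):
    """Inhomogeneous polynomial kernel of degree two on the real line."""
    return (1.0 + a * b) ** 2

def poly2_features(a):
    """Explicit feature map: <phi(a), phi(b)> = 1 + 2ab + a^2 b^2 = (1 + ab)^2."""
    return (1.0, math.sqrt(2.0) * a, a * a)

def mmd2_feature_space(X, Y):
    """||mu_X - mu_Y||^2 computed from explicit 3-dimensional feature means."""
    mu_X = [sum(c) / len(X) for c in zip(*map(poly2_features, X))]
    mu_Y = [sum(c) / len(Y) for c in zip(*map(poly2_features, Y))]
    return sum((u - v) ** 2 for u, v in zip(mu_X, mu_Y))

def mmd2_kernel_evaluations(X, Y, k=poly2_kernel):
    """The same squared distance, expanded into kernel evaluations only."""
    m, n = len(X), len(Y)
    kxx = sum(k(a, b) for a in X for b in X) / m ** 2
    kyy = sum(k(a, b) for a in Y for b in Y) / n ** 2
    kxy = sum(k(a, b) for a in X for b in Y) / (m * n)
    return kxx + kyy - 2.0 * kxy

X, Y = [0.1, -0.7, 1.5], [0.4, 2.2]
print(mmd2_feature_space(X, Y), mmd2_kernel_evaluations(X, Y))
```

The kernel-only form is what makes the approach practical, since kernels such as the Gaussian have infinite-dimensional feature maps that cannot be written out explicitly.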

We now establish a condition on the RKHS H under which the mean embedding $\mu_p$ is injective, which indicates that MMD[F,p,q] is a metric³ on the Borel probability measures on X. Evidently, this property will not hold for all H: for instance, a polynomial RKHS of degree two cannot distinguish between distributions with the same mean and variance, but different kurtosis (Sriperumbudur et al., 2010b, Example 3). The MMD is a metric, however, when H is a universal RKHS defined on a compact metric space X. Universality requires that k(·,·) be continuous, and H be dense in C(X) with respect to the $L_\infty$ norm. Steinwart (2001) proves that the Gaussian and Laplace RKHSs are universal.
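The failure of the degree-two polynomial RKHS can be reproduced exactly with two finitely supported distributions. The sketch below uses a pair chosen for illustration: p uniform on {−1, 1}, and q placing mass 1/8, 3/4, 1/8 on {−2, 0, 2}. Both have mean 0 and variance 1, but their fourth moments differ. The sketch evaluates the population MMD² through the kernel expectations derived in Lemma 6 below ($\mathbf{E}k(x,x') - 2\mathbf{E}k(x,y) + \mathbf{E}k(y,y')$). It uses exact rational arithmetic for the polynomial kernel and floating point for a Gaussian kernel. All names are illustrative.

```python
import math
from fractions import Fraction

def population_mmd2(p, q, kernel):
    """Population MMD^2 for finitely supported p, q given as {point: probability}."""
    def cross(r, s):
        # E_{x ~ r, x' ~ s} k(x, x') for independent x and x'
        return sum(wr * ws * kernel(a, b) for a, wr in r.items() for b, ws in s.items())
    return cross(p, p) - 2 * cross(p, q) + cross(q, q)

poly2 = lambda a, b: (1 + a * b) ** 2
gauss = lambda a, b: math.exp(-((a - b) ** 2) / 2.0)

# Both distributions have mean 0 and variance 1, but different fourth moments.
p = {-1: Fraction(1, 2), 1: Fraction(1, 2)}
q = {-2: Fraction(1, 8), 0: Fraction(3, 4), 2: Fraction(1, 8)}

print(population_mmd2(p, q, poly2))  # exactly zero: the features only see two moments
print(population_mmd2(p, q, gauss))  # strictly positive for the Gaussian kernel
```

For the polynomial kernel the population MMD² reduces to $2(\mathbf{E}x - \mathbf{E}y)^2 + (\mathbf{E}x^2 - \mathbf{E}y^2)^2$, which vanishes whenever the first two moments agree.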

Theorem 5 Let F be a unit ball in a universal RKHS H, defined on the compact metric space X,

with associated continuous kernel k(·,·). Then MMD[F, p, q] = 0 if and only if p = q.

Proof The proof follows Cortes et al. (2008, Supplementary Appendix), whose approach is clearer than the original proof of Gretton et al. (2008a, p. 4).⁴ First, it is clear that p = q implies MMD[F,p,q] is zero. We now prove the converse. By the universality of H, for any given ε > 0 and f ∈ C(X) there exists a g ∈ H such that

$$\|f - g\|_\infty \le \varepsilon.$$

We next make the expansion

$$|\mathbf{E}_x f(x) - \mathbf{E}_y f(y)| \le |\mathbf{E}_x f(x) - \mathbf{E}_x g(x)| + |\mathbf{E}_x g(x) - \mathbf{E}_y g(y)| + |\mathbf{E}_y g(y) - \mathbf{E}_y f(y)|.$$

The first and third terms satisfy

$$|\mathbf{E}_x f(x) - \mathbf{E}_x g(x)| \le \mathbf{E}_x |f(x) - g(x)| \le \varepsilon.$$

Next, write

$$\mathbf{E}_x g(x) - \mathbf{E}_y g(y) = \langle g, \mu_p - \mu_q\rangle_H = 0,$$

since MMD[F,p,q] = 0 implies $\mu_p = \mu_q$ by Lemma 4. Hence

$$|\mathbf{E}_x f(x) - \mathbf{E}_y f(y)| \le 2\varepsilon$$

for all f ∈ C(X) and ε > 0, which implies p = q by Lemma 1.

3. According to Dudley (2002, p. 26) a metric d(x,y) satisfies the following four properties: symmetry, triangle inequality, d(x,x) = 0, and d(x,y) = 0 ⟹ x = y. A pseudo-metric only satisfies the first three properties.

4. Note that the proof of Cortes et al. (2008) requires an application of the dominated convergence theorem, rather than using the Riesz representation theorem to show the existence of the mean embeddings $\mu_p$ and $\mu_q$ as we did in Lemma 3.

While our result establishes that the mapping $\mu_p$ is injective for universal kernels on compact domains, this result can also be shown in more general cases. Fukumizu et al. (2008) introduce the notion of characteristic kernels, these being kernels for which the mean map is injective. Fukumizu et al. establish that Gaussian and Laplace kernels are characteristic on $\mathbb{R}^d$, and thus that the associated MMD is a metric on distributions for this domain. Sriperumbudur et al. (2008, 2010b) and Sriperumbudur et al. (2011a) further explore the properties of characteristic kernels, providing a simple condition to determine whether translation invariant kernels are characteristic, and investigating the relation between universal and characteristic kernels on non-compact domains.

Given we are in an RKHS, we may easily obtain an expression for the squared MMD, $\|\mu_p - \mu_q\|_H^2$, in terms of kernel functions, and a corresponding unbiased finite sample estimate.

Lemma 6 Given x and x′ independent random variables with distribution p, and y and y′ independent random variables with distribution q, the squared population MMD is

$$\mathrm{MMD}^2[F,p,q] = \mathbf{E}_{x,x'}\left[k(x,x')\right] - 2\mathbf{E}_{x,y}\left[k(x,y)\right] + \mathbf{E}_{y,y'}\left[k(y,y')\right],$$

where x′ is an independent copy of x with the same distribution, and y′ is an independent copy of y.

An unbiased empirical estimate is a sum of two U-statistics and a sample average,

$$\mathrm{MMD}_u^2[F,X,Y] = \frac{1}{m(m-1)}\sum_{i=1}^m\sum_{j\neq i}^m k(x_i,x_j) + \frac{1}{n(n-1)}\sum_{i=1}^n\sum_{j\neq i}^n k(y_i,y_j) - \frac{2}{mn}\sum_{i=1}^m\sum_{j=1}^n k(x_i,y_j). \qquad (3)$$

When m = n, a slightly simpler empirical estimate may be used. Let $Z := (z_1, \ldots, z_m)$ be m i.i.d. random variables, where $z := (x,y) \sim p \times q$ (i.e., x and y are independent). An unbiased estimate of $\mathrm{MMD}^2$ is

$$\mathrm{MMD}_u^2[F,X,Y] = \frac{1}{m(m-1)}\sum_{i\neq j}^m h(z_i,z_j), \qquad (4)$$

which is a one-sample U-statistic with

$$h(z_i,z_j) := k(x_i,x_j) + k(y_i,y_j) - k(x_i,y_j) - k(x_j,y_i).$$

Proof Starting from the expression for $\mathrm{MMD}^2[F,p,q]$ in Lemma 4,

$$\begin{aligned}
\mathrm{MMD}^2[F,p,q] &= \|\mu_p - \mu_q\|_H^2 = \langle \mu_p, \mu_p\rangle_H + \langle \mu_q, \mu_q\rangle_H - 2\langle \mu_p, \mu_q\rangle_H \\
&= \mathbf{E}_{x,x'}\langle \phi(x), \phi(x')\rangle_H + \mathbf{E}_{y,y'}\langle \phi(y), \phi(y')\rangle_H - 2\mathbf{E}_{x,y}\langle \phi(x), \phi(y)\rangle_H.
\end{aligned}$$

The proof is completed by applying $\langle \phi(x), \phi(x')\rangle_H = k(x,x')$; the empirical estimates follow straightforwardly, by replacing the population expectations with their corresponding U-statistics and sample averages. This statistic is unbiased following Serfling (1980, Chapter 5).
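A direct transcription of (3) takes only a few lines. The sketch below assumes a Gaussian kernel on the real line and uses illustrative names throughout. To check unbiasedness without Monte Carlo noise, it takes two distributions on two points each, enumerates every possible pair of samples with its probability, and compares the exact expectation of (3) with the population value from Lemma 6.

```python
import itertools
import math

def gaussian_kernel(a, b, sigma=1.0):
    """Gaussian kernel on the real line."""
    return math.exp(-((a - b) ** 2) / (2.0 * sigma ** 2))

def mmd2_unbiased(X, Y, k=gaussian_kernel):
    """Unbiased estimate (3): two U-statistics (i != j) minus twice a cross-sample average."""
    m, n = len(X), len(Y)
    if m < 2 or n < 2:
        raise ValueError("each sample needs at least two points")
    kxx = sum(k(X[i], X[j]) for i in range(m) for j in range(m) if i != j) / (m * (m - 1))
    kyy = sum(k(Y[i], Y[j]) for i in range(n) for j in range(n) if i != j) / (n * (n - 1))
    kxy = sum(k(x, y) for x in X for y in Y) / (m * n)
    return kxx + kyy - 2.0 * kxy

def mmd2_population(p, q, k=gaussian_kernel):
    """Lemma 6 for finitely supported p, q given as {point: probability}."""
    e = lambda r, s: sum(wr * ws * k(a, b) for a, wr in r.items() for b, ws in s.items())
    return e(p, p) - 2.0 * e(p, q) + e(q, q)

def expected_estimate(p, q, m, n, k=gaussian_kernel):
    """Exact expectation of mmd2_unbiased over every possible pair of samples (tiny supports only)."""
    total = 0.0
    for xs in itertools.product(p, repeat=m):
        for ys in itertools.product(q, repeat=n):
            weight = math.prod(p[x] for x in xs) * math.prod(q[y] for y in ys)
            total += weight * mmd2_unbiased(list(xs), list(ys), k)
    return total

p = {0.0: 0.3, 1.0: 0.7}
q = {0.5: 0.6, 2.0: 0.4}
print(expected_estimate(p, q, m=3, n=2), mmd2_population(p, q))
```

The enumeration grows exponentially and is only a correctness check. In practice (3) is evaluated once on the observed samples, at a cost of $O((m+n)^2)$ kernel evaluations.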

Note that $\mathrm{MMD}_u^2$ may be negative, since it is an unbiased estimator of $(\mathrm{MMD}[F,p,q])^2$. The only terms missing to ensure nonnegativity, however, are $h(z_i,z_i)$, which were excluded to avoid spurious correlations between observations. Summing h over all pairs (i, j), including i = j, gives $\|\sum_i \phi(x_i) - \sum_i \phi(y_i)\|_H^2 \ge 0$. Consequently we have the bound

$$\mathrm{MMD}_u^2 + \frac{1}{m(m-1)}\sum_{i=1}^m\left(k(x_i,x_i) + k(y_i,y_i) - 2k(x_i,y_i)\right) \ge 0.$$

Moreover, while the empirical statistic for m = n is an unbiased estimate of $\mathrm{MMD}^2$, it does not have minimum variance, since we ignore the cross-terms $k(x_i,y_i)$, of which there are O(n). From (3), however, we see the minimum variance estimate is almost identical (Serfling, 1980, Section 5.1.4).
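For the paired statistic (4), the following minimal sketch (again assuming a Gaussian kernel, with illustrative names) computes the estimate from the one-sample kernel h. It also computes the diagonal terms $h(z_i,z_i)$ that appear in the bound above. On two samples drawn from the same distribution the estimate is often negative, while the corrected sum stays nonnegative.

```python
import math
import random

def gaussian_kernel(a, b, sigma=1.0):
    """Gaussian kernel on the real line."""
    return math.exp(-((a - b) ** 2) / (2.0 * sigma ** 2))

def h(zi, zj, k=gaussian_kernel):
    """Kernel of the one-sample U-statistic, for paired observations z = (x, y)."""
    (xi, yi), (xj, yj) = zi, zj
    return k(xi, xj) + k(yi, yj) - k(xi, yj) - k(xj, yi)

def mmd2_unbiased_paired(X, Y, k=gaussian_kernel):
    """Estimate (4) for m = n: pair x_i with y_i and average h over i != j."""
    if len(X) != len(Y) or len(X) < 2:
        raise ValueError("need two samples of equal size m >= 2")
    Z = list(zip(X, Y))
    m = len(Z)
    return sum(h(Z[i], Z[j], k) for i in range(m) for j in range(m) if i != j) / (m * (m - 1))

def diagonal_correction(X, Y, k=gaussian_kernel):
    """The omitted terms h(z_i, z_i) = k(x_i, x_i) + k(y_i, y_i) - 2 k(x_i, y_i), scaled by 1 / (m (m - 1))."""
    m = len(X)
    return sum(h((x, y), (x, y), k) for x, y in zip(X, Y)) / (m * (m - 1))

rng = random.Random(0)
X = [rng.gauss(0.0, 1.0) for _ in range(10)]
Y = [rng.gauss(0.0, 1.0) for _ in range(10)]
u = mmd2_unbiased_paired(X, Y)
print(u, u + diagonal_correction(X, Y))  # u may be negative; the corrected sum is not
```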

The biased statistic in (2) may also be easily computed following the above reasoning. Substituting the empirical estimates $\mu_X := \frac{1}{m}\sum_{i=1}^m \phi(x_i)$ and $\mu_Y := \frac{1}{n}\sum_{i=1}^n \phi(y_i)$ of the feature space means based on respective samples X and Y, we obtain

$$\mathrm{MMD}_b[F,X,Y] = \left[\frac{1}{m^2}\sum_{i,j=1}^m k(x_i,x_j) - \frac{2}{mn}\sum_{i,j=1}^{m,n} k(x_i,y_j) + \frac{1}{n^2}\sum_{i,j=1}^n k(y_i,y_j)\right]^{1/2}. \qquad (5)$$

Note that the U-statistics of (3) have been replaced by V-statistics. Intuitively we expect the empirical test statistic MMD[F,X,Y], whether biased or unbiased, to be small if p = q, and large if the distributions are far apart. It costs $O((m+n)^2)$ time to compute both statistics.
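The biased statistic (5) can be sketched in the same style, assuming a Gaussian kernel with σ = 1 on the real line (the kernel and bandwidth are illustrative choices). The three double loops make the $O((m+n)^2)$ cost visible. The bracket in (5) is a squared RKHS norm, so the sketch clips small negative rounding errors before taking the square root.

```python
import math

def gaussian_kernel(a, b, sigma=1.0):
    """Gaussian kernel on the real line."""
    return math.exp(-((a - b) ** 2) / (2.0 * sigma ** 2))

def mmd_biased(X, Y, k=gaussian_kernel):
    """Biased statistic (5): square root of a V-statistic that keeps the diagonal terms."""
    m, n = len(X), len(Y)
    kxx = sum(k(a, b) for a in X for b in X) / m ** 2
    kyy = sum(k(a, b) for a in Y for b in Y) / n ** 2
    kxy = sum(k(a, b) for a in X for b in Y) / (m * n)
    # The bracket equals ||mu_X - mu_Y||_H^2 >= 0; clip rounding noise before the square root.
    return math.sqrt(max(kxx + kyy - 2.0 * kxy, 0.0))

X = [0.0, 0.5, 1.0]
print(mmd_biased(X, [0.1, 0.6, 0.9]), mmd_biased(X, [3.0, 3.5, 4.0]))
```

As expected, the statistic is small for two nearby samples and much larger for two samples that are far apart.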

2.3 Witness Function of the MMD for RKHSs

We define the witness function $f^*$ to be the RKHS function attaining the supremum in (1), and its empirical estimate $\hat f^*$ to be the function attaining the supremum in (2). From the reasoning in Lemma 4, it is clear that

$$f^*(t) \propto \langle \phi(t), \mu_p - \mu_q\rangle_H = \mathbf{E}_x[k(x,t)] - \mathbf{E}_y[k(y,t)],$$

$$\hat f^*(t) \propto \langle \phi(t), \mu_X - \mu_Y\rangle_H = \frac{1}{m}\sum_{i=1}^m k(x_i,t) - \frac{1}{n}\sum_{i=1}^n k(y_i,t),$$

where we have defined $\mu_X = m^{-1}\sum_{i=1}^m \phi(x_i)$, and $\mu_Y$ by analogy. The result follows since the unit vector v maximizing $\langle v, x\rangle_H$ in a Hilbert space is $v = x/\|x\|_H$.

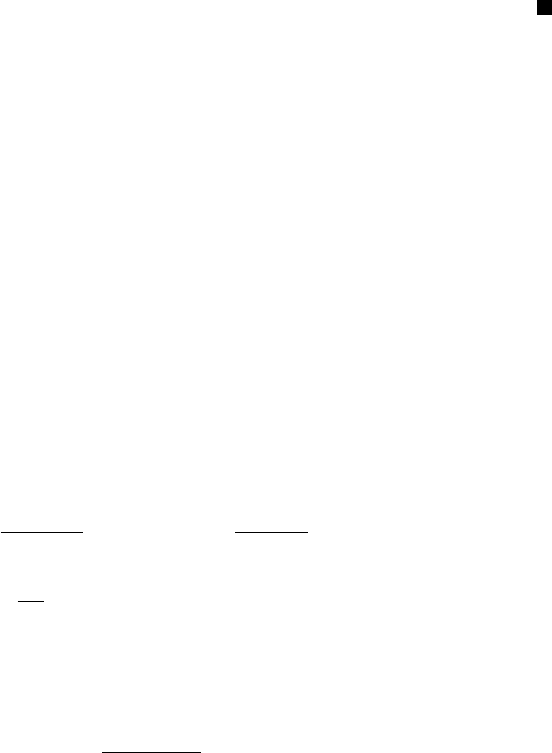

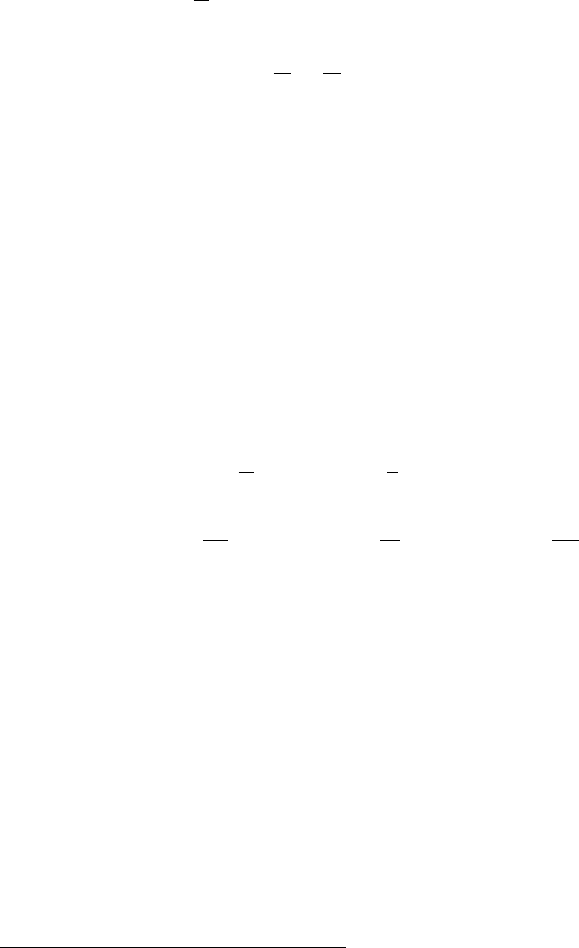

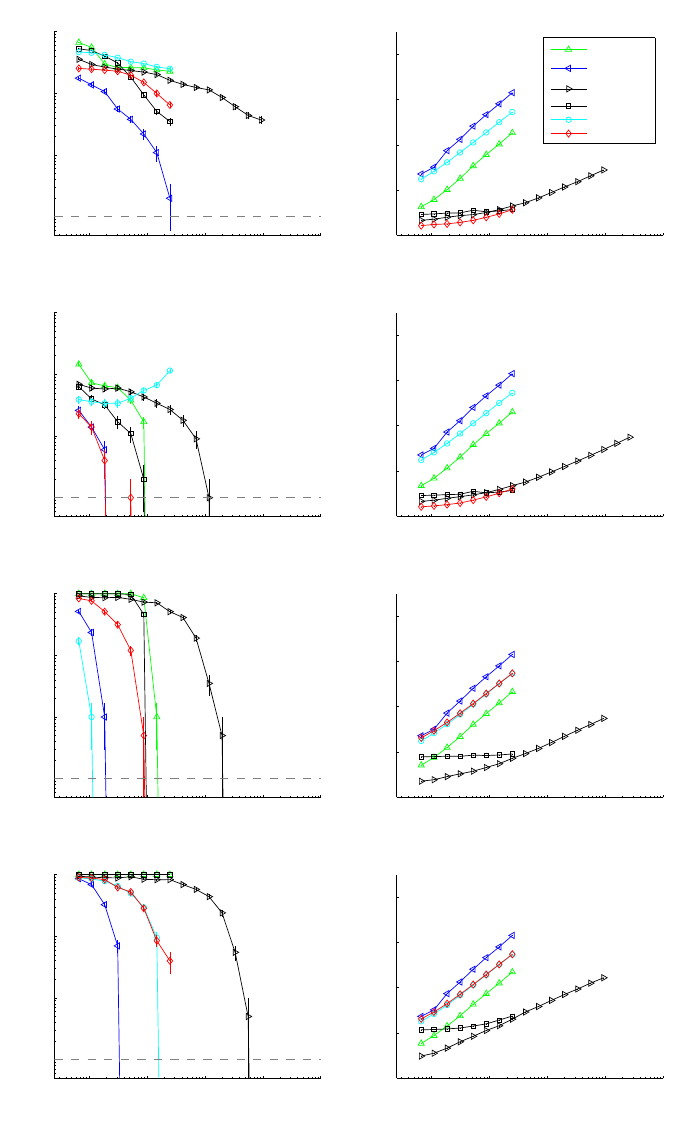

We illustrate the behavior of MMD in Figure 1 using a one-dimensional example. The data X and Y were generated from distributions p and q with equal means and variances, with p Gaussian and q Laplacian. We chose F to be the unit ball in a Gaussian RKHS. The empirical estimate $\hat f^*$ of the function $f^*$ that witnesses the MMD (in other words, the function maximizing the mean discrepancy in (1)) is smooth, negative where the Laplace density exceeds the Gaussian density (at the center and tails), and positive where the Gaussian density is larger. The magnitude of $\hat f^*$ is a direct reflection of the amount by which one density exceeds the other, insofar as the smoothness constraint permits it.

[Figure 1 plot: horizontal axis t, from −6 to 6; vertical axis "Prob. densities and $\hat f^*(t)$", from −0.6 to 0.8; curves $\hat f^*$, p (Gauss) and q (Laplace).]

Figure 1: Illustration of the function maximizing the mean discrepancy in the case where a Gaussian is being compared with a Laplace distribution. Both distributions have zero mean and unit variance. The function $\hat f^*$ that witnesses the MMD has been scaled for plotting purposes, and was computed empirically on the basis of $2 \times 10^4$ samples, using a Gaussian kernel with σ = 0.5.
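The sign pattern described above can be reproduced with the unnormalized empirical witness $\frac{1}{m}\sum_i k(x_i,t) - \frac{1}{n}\sum_i k(y_i,t)$. The sketch below is not the code behind Figure 1. It assumes 4000 draws per distribution, a fixed random seed, and a Gaussian kernel with σ = 0.5. Laplace samples with unit variance use scale $b = 1/\sqrt{2}$, drawn as a random sign times an exponential variable with mean b. Function names are illustrative.

```python
import math
import random

def gaussian_kernel(a, b, sigma=0.5):
    """Gaussian kernel with bandwidth sigma = 0.5, as in the Figure 1 caption."""
    return math.exp(-((a - b) ** 2) / (2.0 * sigma ** 2))

def empirical_witness(X, Y, k=gaussian_kernel):
    """Unnormalized empirical witness: t -> (1/m) sum_i k(x_i, t) - (1/n) sum_i k(y_i, t)."""
    m, n = len(X), len(Y)
    return lambda t: sum(k(x, t) for x in X) / m - sum(k(y, t) for y in Y) / n

def laplace_sample(rng, size, variance=1.0):
    """Zero-mean Laplace draws; the scale b satisfies 2 b^2 = variance."""
    b = math.sqrt(variance / 2.0)
    return [rng.choice((-1.0, 1.0)) * rng.expovariate(1.0 / b) for _ in range(size)]

rng = random.Random(0)
X = [rng.gauss(0.0, 1.0) for _ in range(4000)]  # p: standard Gaussian
Y = laplace_sample(rng, 4000)                   # q: Laplace, zero mean, unit variance
f_hat = empirical_witness(X, Y)
for t in (-3.0, -1.25, 0.0, 1.25, 3.0):
    print(f"t = {t:+.2f}   f_hat(t) = {f_hat(t):+.4f}")
```

The witness is only defined up to a positive scale factor, so the signs and relative sizes of its values carry the information rather than their absolute magnitude.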

3. Background Material

We now present three background results. First, we introduce the terminology used in statistical hypothesis testing. Second, we demonstrate via an example that even for tests which have asymptotically no error, we cannot guarantee performance at any fixed sample size without making assumptions about the distributions. Third, we review some alternative statistics used in comparing distributions, and the associated two-sample tests (see also Section 7 for an overview of additional integral probability metrics).

3.1 Statistical Hypothesis Testing

Having described a metric on probability distributions (the MMD) based on distances between their Hilbert space embeddings, and empirical estimates (biased and unbiased) of this metric, we address the problem of determining whether the empirical MMD shows a statistically significant difference between distributions. To this end, we briefly describe the framework of statistical hypothesis testing as it applies in the present context, following Casella and Berger (2002, Chapter 8). Given i.i.d. samples X ∼ p of size m and Y ∼ q of size n, the statistical test $T(X,Y) : X^m \times X^n \to \{0,1\}$ is used to distinguish between the null hypothesis $H_0 : p = q$ and the alternative hypothesis $H_A : p \neq q$. This is achieved by comparing the test statistic⁵ MMD[F,X,Y] with a particular threshold: if the threshold is exceeded, then the test rejects the null hypothesis (bearing in mind that a zero population MMD indicates p = q). The acceptance region of the test is thus defined as the set of real numbers below the threshold. Since the test is based on finite samples, it is possible that an incorrect answer will be returned. A Type I error is made when p = q is rejected based on the observed samples, despite the null hypothesis having generated the data. Conversely, a Type II error occurs when p = q is accepted despite the underlying distributions being different. The level α of a test is an upper bound on the probability of a Type I error: this is a design parameter of the test which must be set in advance, and is used to determine the threshold to which we compare the test statistic (finding the test threshold for a given α is the topic of Sections 4 and 5). The power of a test against a particular member of the alternative class $H_A$ (i.e., a specific (p,q) such that p ≠ q) is the probability of correctly rejecting p = q in this instance, that is, one minus the probability of a Type II error. A consistent test achieves a level α, and a Type II error of zero, in the large sample limit. We will see that the tests proposed in this paper are consistent.
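The vocabulary of this section (test T(X,Y) ∈ {0,1}, threshold, level, Type I error, power) can be made concrete with a small simulation. The sketch below does not implement any of the paper's tests. It makes one assumption that is only possible in simulation: p = N(0,1) is known, so the threshold can be taken as the simulated (1 − α) quantile of $\mathrm{MMD}_b$ under $H_0$. It then estimates the Type I error rate under $H_0$ and the power against a mean-shifted Gaussian. The kernel, sample sizes, shift and names are all illustrative.

```python
import math
import random

def gaussian_kernel(a, b, sigma=1.0):
    """Gaussian kernel on the real line."""
    return math.exp(-((a - b) ** 2) / (2.0 * sigma ** 2))

def mmd_biased(X, Y, k=gaussian_kernel):
    """Biased statistic (5)."""
    m, n = len(X), len(Y)
    kxx = sum(k(a, b) for a in X for b in X) / m ** 2
    kyy = sum(k(a, b) for a in Y for b in Y) / n ** 2
    kxy = sum(k(a, b) for a in X for b in Y) / (m * n)
    return math.sqrt(max(kxx + kyy - 2.0 * kxy, 0.0))

def simulated_threshold(rng, m, n, alpha, reps):
    """(1 - alpha) quantile of MMD_b when both samples come from a known p = N(0, 1).

    Only possible in simulation; Sections 4 and 5 derive thresholds without knowing p."""
    stats = sorted(mmd_biased([rng.gauss(0, 1) for _ in range(m)],
                              [rng.gauss(0, 1) for _ in range(n)]) for _ in range(reps))
    return stats[min(reps - 1, math.ceil((1 - alpha) * reps) - 1)]

def make_test(threshold):
    """T(X, Y) in {0, 1}: reject H0 (return 1) when the statistic exceeds the threshold."""
    return lambda X, Y: int(mmd_biased(X, Y) > threshold)

def rejection_rate(rng, test, m, n, shift, reps):
    """Fraction of rejections when p = N(0, 1) and q = N(shift, 1)."""
    hits = 0
    for _ in range(reps):
        X = [rng.gauss(0, 1) for _ in range(m)]
        Y = [rng.gauss(shift, 1) for _ in range(n)]
        hits += test(X, Y)
    return hits / reps

rng = random.Random(1)
m = n = 20
T = make_test(simulated_threshold(rng, m, n, alpha=0.05, reps=200))
print("estimated Type I error:", rejection_rate(rng, T, m, n, shift=0.0, reps=200))
print("estimated power at shift 1.5:", rejection_rate(rng, T, m, n, shift=1.5, reps=100))
```

With shift = 0 the rejection rate estimates the Type I error, which should be close to α. With shift ≠ 0 it estimates the power, which is one minus the Type II error.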

3.2 A Negative Result

Even if a test is consistent, it is not possible to distinguish distributions with high probability at a given, fixed sample size (i.e., to provide guarantees on the Type II error), without prior assumptions as to the nature of the difference between p and q. This is true regardless of the two-sample test used. There are several ways to illustrate this, which each give insight into the kinds of differences that might be undetectable for a given number of samples. The following example⁶ is one such illustration.

Example 1 Assume we have a distribution p from which we have drawn m i.i.d. observations. We construct a distribution q by drawing $m^2$ i.i.d. observations from p, and defining a discrete distribution over these $m^2$ instances with probability $m^{-2}$ each. It is easy to check that if we now draw m observations from q, they are pairwise distinct with probability

$$\binom{m^2}{m}\frac{m!}{m^{2m}} = \prod_{i=0}^{m-1}\left(1 - \frac{i}{m^2}\right) \ge 1 - \frac{m(m-1)}{2m^2} > \frac{1}{2},$$

where the first inequality is a union bound over the $\binom{m}{2}$ pairs of draws, each of which coincides with probability $m^{-2}$. In this event we thereby obtain an m sample from p. Hence no test will be able to distinguish samples from p and q in this case. We could make the probability of detection arbitrarily small by increasing the size of the sample from which we construct q.
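The probability in Example 1 can be evaluated exactly with rational arithmetic. The short sketch below computes $\binom{m^2}{m} m!/m^{2m}$ for several m and compares it with the union bound $1 - m(m-1)/(2m^2)$. It also prints $e^{-1/2}$, the limit of the product as m grows (since $\log\prod_i(1 - i/m^2) \approx -\sum_i i/m^2 \to -1/2$). The function names are illustrative.

```python
import math
from fractions import Fraction

def prob_all_distinct(m):
    """Exact probability that m draws, uniform over m^2 atoms, are pairwise distinct:
    C(m^2, m) * m! / m^(2m) = prod_{i<m} (1 - i / m^2)."""
    return Fraction(math.comb(m * m, m) * math.factorial(m), m ** (2 * m))

def union_bound(m):
    """Lower bound 1 - m(m-1) / (2 m^2), from summing the C(m, 2) pairwise collision probabilities."""
    return 1 - Fraction(m * (m - 1), 2 * m * m)

for m in (2, 5, 10, 50, 200):
    print(m, float(prob_all_distinct(m)), float(union_bound(m)))
print("limit exp(-1/2) =", math.exp(-0.5))
```

Using `Fraction` avoids overflow and rounding in the large binomial coefficients; only the final values are converted to floats for display.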

3.3 Previous Work

We next give a brief overview of some earlier approaches to the two-sample problem for multivariate data. Since our later experimental comparison is with respect to certain of these methods, we give abbreviated algorithm names in italics where appropriate: these should be used as a key to the tables in Section 8.

5. This may be biased or unbiased.

6. This is a variation of a construction for independence tests, which was suggested in a private communication by John Langford.

3.3.1 $L_2$ DISTANCE BETWEEN PARZEN WINDOW ESTIMATES

The prior work closest to the current approach is the Parzen window-based statistic of Anderson et al. (1994). We begin with a short overview of the Parzen window estimate and its properties (Silverman, 1986), before proceeding to a comparison with the RKHS approach. We assume a distribution p on $\mathbb{R}^d$, which has an associated density function $f_p$. The Parzen window estimate of this density from an i.i.d. sample X of size m is

$$\hat f_p(x) = \frac{1}{m}\sum_{i=1}^m \kappa(x_i - x), \quad \text{where } \kappa \text{ satisfies } \int_X \kappa(x)\,dx = 1 \text{ and } \kappa(x) \ge 0.$$

We may rescale κ according to $\frac{1}{h_m^d}\kappa\left(\frac{x}{h_m}\right)$ for a bandwidth parameter $h_m$. To simplify the discussion, we use a single bandwidth $h_{m+n}$ for both $\hat f_p$ and $\hat f_q$. Assuming m/n is bounded away from zero and infinity, consistency of the Parzen window estimates for $f_p$ and $f_q$ requires

$$\lim_{m,n\to\infty} h_{m+n}^d = 0 \quad\text{and}\quad \lim_{m,n\to\infty}(m+n)\,h_{m+n}^d = \infty. \qquad (6)$$

We now show that the $L_2$ distance between Parzen window density estimates is a special case of the biased MMD in Equation (5). Denote by $D_r(p,q) := \|f_p - f_q\|_r$ the $L_r$ distance between the densities $f_p$ and $f_q$ corresponding to the distributions p and q, respectively. For r = 1 the distance $D_r(p,q)$ is known as the Lévy distance (Feller, 1971), and for r = 2 we encounter a distance measure derived from the Renyi entropy (Gokcay and Principe, 2002). Assume that $\hat f_p$ and $\hat f_q$ are given as kernel density estimates with kernel κ(x − x′), that is, $\hat f_p(x) = m^{-1}\sum_{i=1}^m \kappa(x_i - x)$ and $\hat f_q(y)$ is defined by analogy. In this case

$$\begin{aligned}
D_2(\hat f_p, \hat f_q)^2 &= \int\left[\frac{1}{m}\sum_{i=1}^m \kappa(x_i - z) - \frac{1}{n}\sum_{i=1}^n \kappa(y_i - z)\right]^2 dz \\
&= \frac{1}{m^2}\sum_{i,j=1}^m k(x_i - x_j) + \frac{1}{n^2}\sum_{i,j=1}^n k(y_i - y_j) - \frac{2}{mn}\sum_{i,j=1}^{m,n} k(x_i - y_j),
\end{aligned}$$

where $k(x - y) = \int \kappa(x - z)\kappa(y - z)\,dz$. By its definition k(x − y) is an RKHS kernel, as it is an inner product between κ(x − z) and κ(y − z) on the domain X.
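The identity above can be checked numerically in one dimension. The sketch below assumes a Gaussian Parzen window κ with bandwidth h. In that case the induced kernel $k(u) = \int \kappa(u + v)\kappa(v)\,dv$ is a Gaussian density with variance $2h^2$, since κ is symmetric and two Gaussian densities convolve to a Gaussian. The sketch integrates the squared difference of the two Parzen estimates with the trapezoidal rule and compares it with the V-statistic form. The sample values, bandwidth, integration range and names are illustrative.

```python
import math

def kappa(u, h):
    """Gaussian Parzen window with bandwidth h: nonnegative and integrates to one."""
    return math.exp(-u * u / (2 * h * h)) / (h * math.sqrt(2 * math.pi))

def k_conv(u, h):
    """k(u) = integral of kappa(u + v) kappa(v) dv: a Gaussian density with variance 2 h^2."""
    return math.exp(-u * u / (4 * h * h)) / (2 * h * math.sqrt(math.pi))

def l2_distance_sq_numeric(X, Y, h, lo=-10.0, hi=10.0, steps=20000):
    """D_2(f_p_hat, f_q_hat)^2 by the trapezoidal rule on [lo, hi]."""
    def diff(z):
        return (sum(kappa(x - z, h) for x in X) / len(X)
                - sum(kappa(y - z, h) for y in Y) / len(Y))
    dz = (hi - lo) / steps
    total = 0.5 * (diff(lo) ** 2 + diff(hi) ** 2)
    total += sum(diff(lo + i * dz) ** 2 for i in range(1, steps))
    return total * dz

def l2_distance_sq_kernel(X, Y, h):
    """The same quantity as the V-statistic (biased MMD^2) with kernel k = kappa convolved with kappa."""
    m, n = len(X), len(Y)
    kxx = sum(k_conv(a - b, h) for a in X for b in X) / m ** 2
    kyy = sum(k_conv(a - b, h) for a in Y for b in Y) / n ** 2
    kxy = sum(k_conv(a - b, h) for a in X for b in Y) / (m * n)
    return kxx + kyy - 2.0 * kxy

X, Y, h = [-0.5, 0.2, 1.1], [0.8, 1.9], 0.4
print(l2_distance_sq_numeric(X, Y, h), l2_distance_sq_kernel(X, Y, h))
```

The kernel form needs no numerical integration, and it extends directly to kernels that are not convolutions of Parzen windows.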

We now describe the asymptotic performance of a two-sample test using the statistic $D_2(\hat f_p, \hat f_q)^2$.

We consider the power of the test under local departures from the null hypothesis. Anderson et al. (1994) define these to take the form

$$f_q = f_p + \delta g, \qquad (7)$$

where δ ∈ ℝ, and g is a fixed, bounded, integrable function chosen to ensure that $f_q$ is a valid density for sufficiently small |δ|. Anderson et al. consider two cases: the kernel bandwidth converging to zero with increasing sample size, ensuring consistency of the Parzen window estimates of $f_p$ and $f_q$; and the case of a fixed bandwidth. In the former case, the minimum distance with which the test can discriminate $f_p$ from $f_q$ is⁷ $\delta = (m+n)^{-1/2}h_{m+n}^{-d/2}$. In the latter case, this minimum distance is $\delta = (m+n)^{-1/2}$, under the assumption that the Fourier transform of the kernel κ does not vanish on an interval (Anderson et al., 1994, Section 2.4), which implies the kernel k is characteristic (Sriperumbudur et al., 2010b). The power of the $L_2$ test against local alternatives is greater when the kernel is held fixed, since for any rate of decrease of $h_{m+n}$ with increasing sample size, δ will decrease more slowly than for a fixed kernel.

7. Formally, define $s_\alpha$ as a threshold for the statistic $D_2(\hat f_p, \hat f_q)^2$, chosen to ensure the test has level α, and let $\delta = (m+n)^{-1/2}h_{m+n}^{-d/2}c$ for some fixed c ≠ 0. When m, n → ∞ such that m/n is bounded away from 0 and ∞, and

An RKHS-based approach generalizes the $L_2$ statistic in a number of important respects. First, we may employ a much larger class of characteristic kernels that cannot be written as inner products between Parzen windows: several examples are given by Steinwart (2001, Section 3) and Micchelli et al. (2006, Section 3) (these kernels are universal, hence characteristic). We may further generalize to kernels on structured objects such as strings and graphs (Schölkopf et al., 2004), as done in our experiments (Section 8). Second, even when the kernel may be written as an inner product of Parzen windows on $\mathbb{R}^d$, the $D_2^2$ statistic with fixed bandwidth no longer converges to an $L_2$ distance between probability density functions, hence it is more natural to define the statistic as an integral probability metric for a particular RKHS, as in Definition 2. Indeed, in our experiments, we obtain good performance in experimental settings where the dimensionality greatly exceeds the sample size, and density estimates would perform very poorly⁸ (for instance the Gaussian toy example in Figure 5B, for which performance actually improves when the dimensionality increases; and the microarray data sets in Table 1). This suggests it is not necessary to solve the more difficult problem of density estimation in high dimensions to do two-sample testing.

Finally, the kernel approach leads us to establish consistency against a larger class of local

alternatives to the null hypothesis than that considered by Anderson et al. In Theorem 13, we prove

consistency against a class of alternatives encoded in terms of the mean embeddings of p and q,

which applies to any domain on which RKHS kernels may be defined, and not only densities on R

d

.

This more general approach also has interesting consequences for distributions on R

d

: for instance,

a local departure from H

0

occurs when p and q differ at increasing frequencies in their respective

characteristic functions. This class of local alternatives cannot be expressed in the form δg for fixed

g, as in (7). We discuss this issue further in Section 5.

3.3.2 MMD FOR MULTINOMIALS

Assume a finite domain X := {1,.. ., d}, and define the random variables x and y on X such that

p

i

:= P(x = i) and q

j

:= P(y = j). We embed x into an RKHS H via the feature mapping φ(x) := e

x

,

where e

s

is the unit vector in R

d

taking value 1 in dimension s, and zero in the remaining entries.

The kernel is the usual inner product on R

d

. In this case,

MMD

2

[F, p, q] =

k

p−q

k

2

R

d

=

d

∑

i=1

(p

i

−q

i

)

2

. (8)

Harchaoui et al. (2008, Section 1, long version) note that this L

2

statistic may not be the best choice

for finite domains, citing a result of Lehmann and Romano (2005, Theorem 14.3.2) that Pearson’s

assuming conditions (6), the limit

π(c) := lim

(m+n)→∞

Pr

H

A

D

2

ˆ

f

p

,

ˆ

f

q

2

> s

α

is well-defined, and satisfies α < π(c) < 1 for 0 < |c| < ∞, and π(c) → 1 as c → ∞.

8. The L

2

error of a kernel density estimate converges as O(n

−4/(4+d)

) when the optimal bandwidth is used (Wasserman,

2006, Section 6.5).

733

GRETTON, BORGWARDT, RASCH, SCH

¨

OLKOPF AND SMOLA

Chi-squared statistic is optimal for the problem of goodness of fit testing for multinomials.

9

It would

be of interest to establish whether an analogous result holds for two-sample testing in a wider class

of RKHS feature spaces.
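To make (8) concrete, here is a minimal NumPy sketch (an illustration, not code from the paper). It assumes categories are coded $0, \ldots, d-1$ rather than $1, \ldots, d$, and the function names are hypothetical. With the one-hot feature map, the empirical mean embedding of a sample is its vector of relative frequencies, so the biased empirical $\mathrm{MMD}^2$ computed from Gram matrices coincides with the squared Euclidean distance between the two frequency vectors, i.e., the plug-in version of (8).

```python
import numpy as np


def multinomial_mmd2(p, q):
    """Population MMD^2 of eq. (8): squared Euclidean distance of probability vectors."""
    p = np.asarray(p, dtype=float)
    q = np.asarray(q, dtype=float)
    return float(np.sum((p - q) ** 2))


def frequency_mmd2_b(x, y, d):
    """Biased empirical MMD^2: distance between vectors of relative frequencies."""
    px = np.bincount(x, minlength=d) / len(x)
    qy = np.bincount(y, minlength=d) / len(y)
    return float(np.sum((px - qy) ** 2))


def gram_mmd2_b(x, y, d):
    """Same quantity via Gram matrices of the linear kernel on one-hot features."""
    ex, ey = np.eye(d)[x], np.eye(d)[y]  # phi(s) = e_s, shapes (m, d) and (n, d)
    kxx, kyy, kxy = ex @ ex.T, ey @ ey.T, ex @ ey.T  # k(s, t) = 1 if s == t else 0
    return float(kxx.mean() + kyy.mean() - 2 * kxy.mean())


rng = np.random.default_rng(0)
x = rng.choice(4, size=300, p=[0.4, 0.3, 0.2, 0.1])
y = rng.choice(4, size=200, p=[0.25, 0.25, 0.25, 0.25])
print(multinomial_mmd2([0.4, 0.3, 0.2, 0.1], [0.25] * 4))  # population value of (8)
print(frequency_mmd2_b(x, y, 4), gram_mmd2_b(x, y, 4))     # equal up to rounding
```

The Gram-matrix version makes explicit that (8) is simply the MMD with the linear kernel on indicator features; its mean of `kxx` equals $\sum_s \hat p_s^2$, and similarly for the other two terms.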

3.3.3 FURTHER MULTIVARIATE TWO-SAMPLE TESTS

Biau and Gyorfi (2005) (Biau) use as their test statistic the $L_1$ distance between discretized estimates of the probabilities, where the partitioning is refined as the sample size increases. This space

partitioning approach becomes difficult or impossible for high dimensional problems, since there

are too few points per bin. For this reason, we use this test only for low-dimensional problems in

our experiments.

A generalisation of the Wald-Wolfowitz runs test to the multivariate domain was proposed and

analysed by Friedman and Rafsky (1979) and Henze and Penrose (1999) (FR Wolf), and involves

counting the number of edges in the minimum spanning tree over the aggregated data that connect

points in X to points in Y. The resulting test relies on the asymptotic normality of the test statistic,

and is not distribution-free under the null hypothesis for finite samples (the test threshold depends

on p, as with our asymptotic test in Section 5; by contrast, our tests in Section 4 are distribution-free). The computational cost of this method using Kruskal's algorithm is $O((m+n)^2 \log(m+n))$, although more modern methods improve on the $\log(m+n)$ term: see Chazelle (2000) for details. Friedman and Rafsky (1979) claim that calculating the matrix of distances, which costs $O((m+n)^2)$,

dominates their computing time; we return to this point in our experiments (Section 8). Two possible

generalisations of the Kolmogorov-Smirnov test to the multivariate case were studied by Bickel

(1969) and Friedman and Rafsky (1979). The approach of Friedman and Rafsky (FR Smirnov) in

this case again requires a minimal spanning tree, and has a similar cost to their multivariate runs

test.
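A minimal sketch of the Friedman-Rafsky runs statistic, assuming Euclidean distances and NumPy (function names hypothetical). It builds the minimum spanning tree with Prim's algorithm on the full $O((m+n)^2)$ distance matrix, a substitute for the Kruskal implementation mentioned above chosen for brevity, and returns only the raw count of tree edges joining a point of X to a point of Y. The standardization and normal approximation that turn this count into a test are not shown. Few cross edges suggest the two samples occupy different regions, that is, evidence against $p = q$.

```python
import numpy as np


def mst_edges(points):
    """Prim's algorithm on the complete Euclidean graph: O(N^2) time and memory."""
    n = len(points)
    dist = np.linalg.norm(points[:, None, :] - points[None, :, :], axis=-1)
    in_tree = np.zeros(n, dtype=bool)
    in_tree[0] = True
    best = dist[0].copy()            # cheapest known link from each vertex to the tree
    parent = np.zeros(n, dtype=int)  # tree endpoint of that cheapest link
    edges = []
    for _ in range(n - 1):
        j = int(np.argmin(np.where(in_tree, np.inf, best)))
        edges.append((int(parent[j]), j))
        in_tree[j] = True
        closer = dist[j] < best      # vertices now reachable more cheaply via j
        best = np.where(closer, dist[j], best)
        parent = np.where(closer, j, parent)
    return edges


def fr_cross_edges(x, y):
    """Number of MST edges over the pooled sample that join a point of X to a point of Y."""
    z = np.vstack([x, y])
    label = np.r_[np.zeros(len(x), dtype=int), np.ones(len(y), dtype=int)]
    return sum(int(label[a] != label[b]) for a, b in mst_edges(z))
```

For two well-separated clusters the tree contains exactly one bridging edge, so `fr_cross_edges` returns 1, the smallest possible value.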

A more recent multivariate test was introduced by Rosenbaum (2005). This entails computing

the minimum distance non-bipartite matching over the aggregate data, and using the number of pairs

containing a sample from both X and Y as a test statistic. The resulting statistic is distribution-free

under the null hypothesis at finite sample sizes, in which respect it is superior to the Friedman-Rafsky test; on the other hand, it costs $O((m+n)^3)$ to compute. Another distribution-free test

(Hall) was proposed by Hall and Tajvidi (2002): for each point from p, it requires computing the

closest points in the aggregated data, and counting how many of these are from q (the procedure is

repeated for each point from q with respect to points from p). As we shall see in our experimental

comparisons, the test statistic is costly to compute; Hall and Tajvidi consider only tens of points in

their experiments.

4. Tests Based on Uniform Convergence Bounds

In this section, we introduce two tests for the two-sample problem that have exact performance

guarantees at finite sample sizes, based on uniform convergence bounds. The first, in Section 4.1,

uses the McDiarmid (1989) bound on the biased MMD statistic, and the second, in Section 4.2, uses

a Hoeffding (1963) bound for the unbiased statistic.

9. A goodness of fit test determines whether a sample from p is drawn from a known target multinomial q. Pearson’s

Chi-squared statistic weights each term in the sum (8) by its corresponding $q_i^{-1}$.


4.1 Bound on the Biased Statistic and Test

We establish two properties of the MMD, from which we derive a hypothesis test. First, we show that regardless of whether or not p = q, the empirical MMD converges in probability at rate $O((m+n)^{-1/2})$ to its population value. This shows the consistency of statistical tests based on the MMD. Second, we give probabilistic bounds for large deviations of the empirical MMD in the case p = q. These bounds lead directly to a threshold for our first hypothesis test. We begin by establishing the convergence of $\mathrm{MMD}_b[F,X,Y]$ to $\mathrm{MMD}[F,p,q]$. The following theorem is proved in Appendix A.2.

Theorem 7 Let p, q, X, Y be defined as in Problem 1, and assume $0 \le k(x,y) \le K$. Then
$$\Pr_{X,Y}\left\{\left|\mathrm{MMD}_b[F,X,Y] - \mathrm{MMD}[F,p,q]\right| > 2\left((K/m)^{\frac12} + (K/n)^{\frac12}\right) + \epsilon\right\} \le 2\exp\left(\frac{-\epsilon^2 mn}{2K(m+n)}\right),$$
where $\Pr_{X,Y}$ denotes the probability over the m-sample X and n-sample Y.

Our next goal is to refine this result in a way that allows us to define a test threshold under the null

hypothesis p = q. Under this circumstance, the constants in the exponent are slightly improved. The

following theorem is proved in Appendix A.3.

Theorem 8 Under the conditions of Theorem 7 where additionally p = q and m = n,
$$\mathrm{MMD}_b[F,X,Y] \le \underbrace{m^{-\frac12}\sqrt{2\mathbf{E}_{x,x'}\left[k(x,x) - k(x,x')\right]}}_{B_1(F,p)} + \epsilon \le \underbrace{(2K/m)^{1/2}}_{B_2(F,p)} + \epsilon,$$
both with probability at least $1 - \exp\left(-\frac{\epsilon^2 m}{4K}\right)$.

In this theorem, we illustrate two possible bounds $B_1(F,p)$ and $B_2(F,p)$ on the bias in the empirical estimate (5). The first inequality is interesting inasmuch as it provides a link between the bias bound $B_1(F,p)$ and kernel size (for instance, if we were to use a Gaussian kernel with large $\sigma$, then $k(x,x)$ and $k(x,x')$ would likely be close, and the bias small). In the context of testing, however, we would need to provide an additional bound to show convergence of an empirical estimate of $B_1(F,p)$ to its population equivalent. Thus, in the following test for p = q based on Theorem 8, we use $B_2(F,p)$ to bound the bias.$^{10}$

Corollary 9 A hypothesis test of level α for the null hypothesis p = q, that is, for MMD[F, p, q] = 0, has the acceptance region $\mathrm{MMD}_b[F,X,Y] < \sqrt{2K/m}\left(1 + \sqrt{2\log\alpha^{-1}}\right)$.

We emphasize that this test is distribution-free: the test threshold does not depend on the particular

distribution that generated the sample. Theorem 7 guarantees the consistency of the test against fixed

alternatives, and that the Type II error probability decreases to zero at rate $O(m^{-1/2})$, assuming m = n. To put this convergence rate in perspective, consider a test of whether two normal distributions have equal means, given they have unknown but equal variance (Casella and Berger, 2002, Exercise 8.41). In this case, the test statistic has a Student-t distribution with $n + m - 2$ degrees of freedom, and its Type II error probability converges at the same rate as our test.
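A minimal sketch of the distribution-free test of Corollary 9, assuming NumPy and a Gaussian kernel (our choice for illustration), for which $0 \le k \le 1$ so that $K = 1$. The biased statistic $\mathrm{MMD}_b$ is the RKHS distance between the two empirical mean embeddings, and $H_0$ is rejected when it falls outside the acceptance region. The bandwidth `sigma` and the function names are illustrative assumptions.

```python
import numpy as np


def gaussian_gram(a, b, sigma):
    """Gram matrix of k(u, v) = exp(-||u - v||^2 / (2 sigma^2)); here 0 <= k <= K = 1."""
    sq = np.sum(a**2, axis=1)[:, None] + np.sum(b**2, axis=1)[None, :] - 2 * a @ b.T
    return np.exp(-np.maximum(sq, 0.0) / (2 * sigma**2))


def mmd_b(x, y, sigma):
    """Biased MMD_b[F, X, Y]: diagonal terms are included in the within-sample means."""
    val = (gaussian_gram(x, x, sigma).mean() + gaussian_gram(y, y, sigma).mean()
           - 2 * gaussian_gram(x, y, sigma).mean())
    return float(np.sqrt(max(val, 0.0)))


def corollary9_test(x, y, sigma=1.0, alpha=0.05, K=1.0):
    """Return (statistic, threshold, reject_H0); Corollary 9 is stated for m = n."""
    m = len(x)
    if len(y) != m:
        raise ValueError("Corollary 9 is stated for m = n")
    threshold = float(np.sqrt(2 * K / m) * (1 + np.sqrt(2 * np.log(1 / alpha))))
    stat = mmd_b(x, y, sigma)
    return stat, threshold, bool(stat >= threshold)
```

For example, with 200 points per sample and $\alpha = 0.05$ the threshold is about 0.35; samples from the same Gaussian typically give $\mathrm{MMD}_b$ far below it, while a mean shift of a few standard deviations exceeds it. Because the threshold ignores the data distribution, the test is conservative.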

It is worth noting that bounds may be obtained for the deviation between population mean embeddings $\mu_p$ and the empirical embeddings $\mu_X$ in a completely analogous fashion. The proof requires symmetrization by means of a ghost sample, that is, a second set of observations drawn from the same distribution. While not the focus of the present paper, such bounds can be used to perform inference based on moment matching (Altun and Smola, 2006; Dudík and Schapire, 2006; Dudík et al., 2004).

10. Note that we use a tighter bias bound than Gretton et al. (2007a).

4.2 Bound on the Unbiased Statistic and Test

The previous bounds are of interest since the proof strategy can be used for general function classes

with well behaved Rademacher averages (see Sriperumbudur et al., 2010a). When F is the unit ball

in an RKHS, however, we may very easily define a test via a convergence bound on the unbiased statistic $\mathrm{MMD}^2_u$ in Lemma 4. We base our test on the following theorem, which is a straightforward application of the large deviation bound on U-statistics of Hoeffding (1963, p. 25).

Theorem 10 Assume $0 \le k(x_i,x_j) \le K$, from which it follows that $-2K \le h(z_i,z_j) \le 2K$. Then
$$\Pr_{X,Y}\left\{\mathrm{MMD}^2_u(F,X,Y) - \mathrm{MMD}^2(F,p,q) > t\right\} \le \exp\left(\frac{-t^2 m_2}{8K^2}\right)$$
where $m_2 := \lfloor m/2 \rfloor$ (the same bound applies for deviations of $-t$ and below).

A consistent statistical test for p = q using $\mathrm{MMD}^2_u$ is then obtained.

Corollary 11 A hypothesis test of level α for the null hypothesis p = q has the acceptance region $\mathrm{MMD}^2_u < (4K/\sqrt{m})\sqrt{\log(\alpha^{-1})}$.

This test is distribution-free. We now compare the thresholds of the above test with that in Corollary 9. We note first that the threshold for the biased statistic applies to an estimate of MMD, whereas that for the unbiased statistic is for an estimate of $\mathrm{MMD}^2$. Squaring the former threshold to make the two quantities comparable, the squared threshold in Corollary 9 decreases as $m^{-1}$, whereas the threshold in Corollary 11 decreases as $m^{-1/2}$. Thus for sufficiently large$^{11}$ m, the McDiarmid-based threshold will be lower (and the associated test statistic is in any case biased upwards), and its Type II error will be better for a given Type I bound. This is confirmed in our Section 8 experiments. Note, however, that the rate of convergence of the squared, biased MMD estimate to its population value remains at $1/\sqrt{m}$ (bearing in mind we take the square of a biased estimate, where the bias term decays as $1/\sqrt{m}$).
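The threshold comparison above can be checked numerically. The sketch below (plain Python; function names hypothetical) squares the Corollary 9 threshold and compares it with the Corollary 11 threshold. Both thresholds are proportional to $K$, so $K$ cancels from the comparison, and for $\alpha = 0.05$ the search reproduces the crossover stated in footnote 11.

```python
import math


def biased_threshold_squared(m, alpha, K=1.0):
    """Square of the Corollary 9 threshold, comparable with a threshold on MMD^2."""
    return (2 * K / m) * (1 + math.sqrt(2 * math.log(1 / alpha))) ** 2


def unbiased_threshold(m, alpha, K=1.0):
    """Corollary 11 threshold on MMD^2_u."""
    return (4 * K / math.sqrt(m)) * math.sqrt(math.log(1 / alpha))


def crossover(alpha, K=1.0, m_max=100_000):
    """Smallest m for which the squared McDiarmid threshold is the lower of the two."""
    for m in range(1, m_max + 1):
        if biased_threshold_squared(m, alpha, K) < unbiased_threshold(m, alpha, K):
            return m
    return None


print(crossover(0.05))  # 12
```

Since the ratio of the two thresholds scales as $m^{-1/2}$, once the squared McDiarmid threshold is lower it stays lower for all larger m, so the first crossing found by the search is the relevant one.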

Finally, we note that the bounds we obtained in this section and the last are rather conservative

for a number of reasons: first, they do not take the actual distributions into account. In fact, they are

finite sample size, distribution-free bounds that hold even in the worst case scenario. The bounds

could be tightened using localization, moments of the distribution, etc.: see, for example, Bousquet et al. (2005) and de la Peña and Giné (1999). Any such improvements could be plugged straight

into Theorem 19. Second, in computing bounds rather than trying to characterize the distribution of

MMD(F,X,Y) explicitly, we force our test to be conservative by design. In the following we aim for

an exact characterization of the asymptotic distribution of MMD(F, X,Y) instead of a bound. While

this will not satisfy the uniform convergence requirements, it leads to superior tests in practice.

11. In the case of α = 0.05, this is m ≥ 12.


5. Test Based on the Asymptotic Distribution of the Unbiased Statistic

We propose a third test, which is based on the asymptotic distribution of the unbiased estimate of $\mathrm{MMD}^2$ in Lemma 6. This test uses the asymptotic distribution of $\mathrm{MMD}^2_u$ under $H_0$, which follows from results of Anderson et al. (1994, Appendix) and Serfling (1980, Section 5.5.2): see Appendix B.1 for the proof.

Theorem 12 Let $\tilde k(x_i,x_j)$ be the kernel between feature space mappings from which the mean embedding of p has been subtracted,
$$\tilde k(x_i,x_j) := \left\langle \phi(x_i) - \mu_p, \phi(x_j) - \mu_p \right\rangle_H = k(x_i,x_j) - \mathbf{E}_x k(x_i,x) - \mathbf{E}_x k(x,x_j) + \mathbf{E}_{x,x'} k(x,x'), \qquad (9)$$
where $x'$ is an independent copy of x drawn from p. Assume $\tilde k \in L_2(X \times X, p \times p)$ (i.e., the centred kernel is square integrable, which is true for all p when the kernel is bounded), and that for $t = m+n$, $m/t \to \rho_x$ and $n/t \to \rho_y := (1-\rho_x)$ as $m,n \to \infty$, for fixed $0 < \rho_x < 1$. Then under $H_0$, $\mathrm{MMD}^2_u$ converges in distribution according to
$$t\,\mathrm{MMD}^2_u[F,X,Y] \xrightarrow{D} \sum_{l=1}^{\infty} \lambda_l \left[\left(\rho_x^{-1/2} a_l - \rho_y^{-1/2} b_l\right)^2 - (\rho_x \rho_y)^{-1}\right], \qquad (10)$$
where $a_l \sim \mathcal{N}(0,1)$ and $b_l \sim \mathcal{N}(0,1)$ are infinite sequences of independent Gaussian random variables, and the $\lambda_l$ are eigenvalues of
$$\int_X \tilde k(x,x')\,\psi_l(x)\,dp(x) = \lambda_l\,\psi_l(x').$$

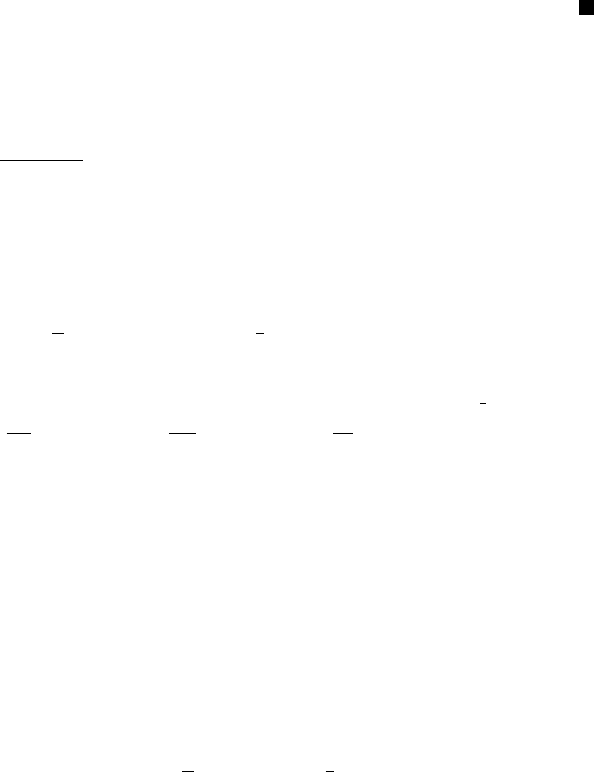

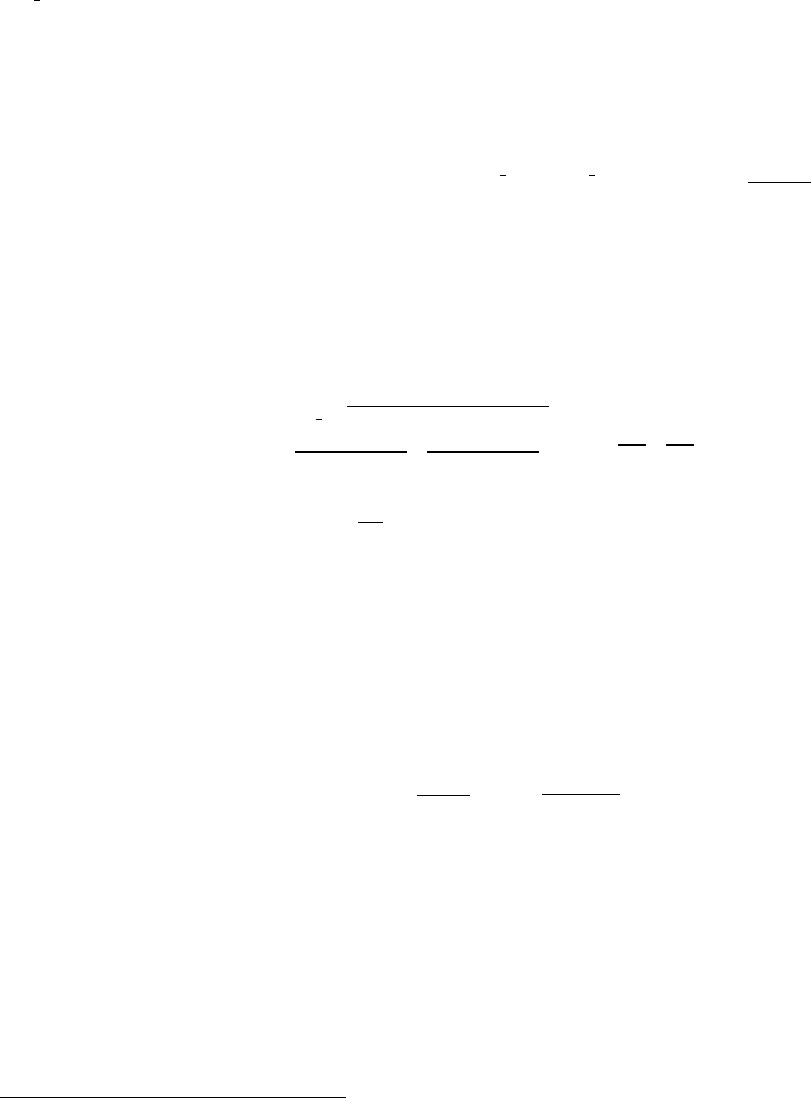

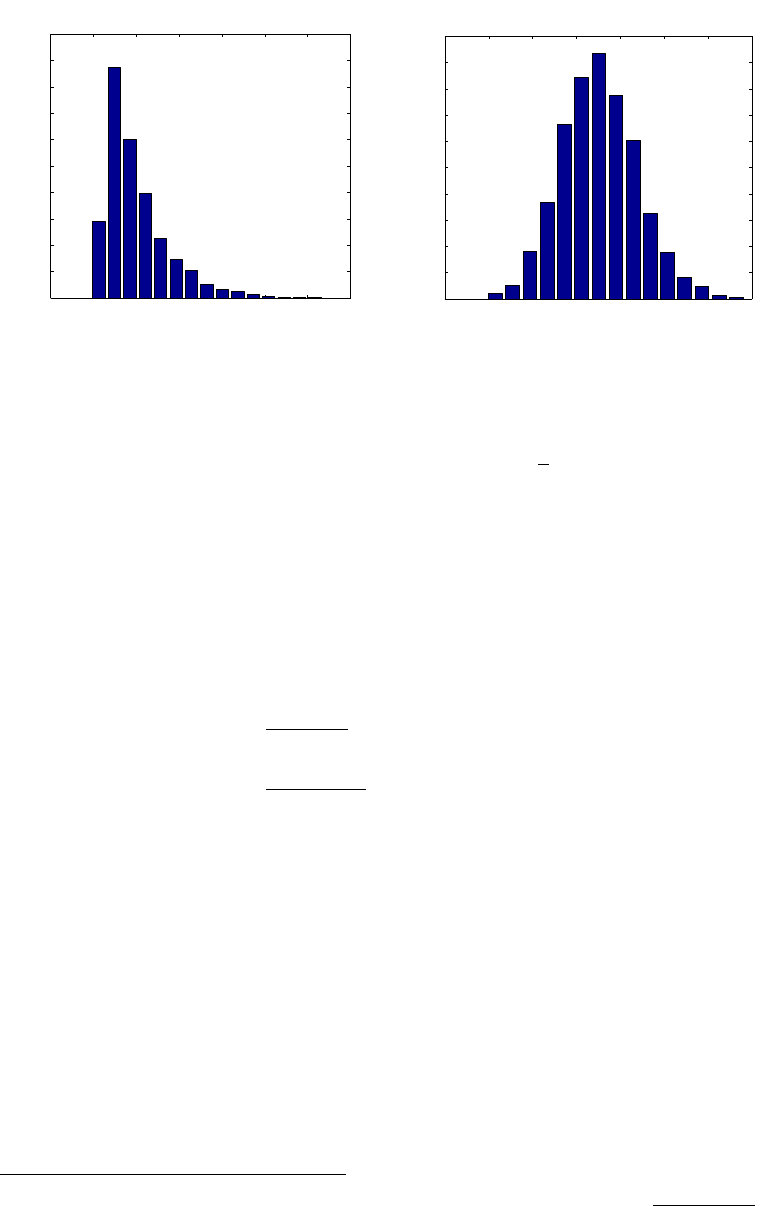

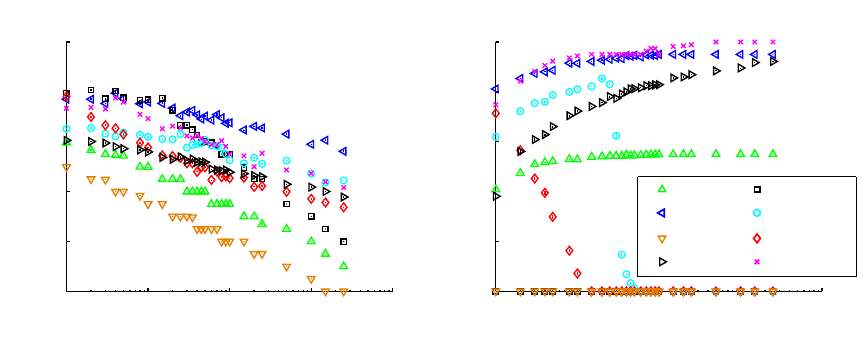

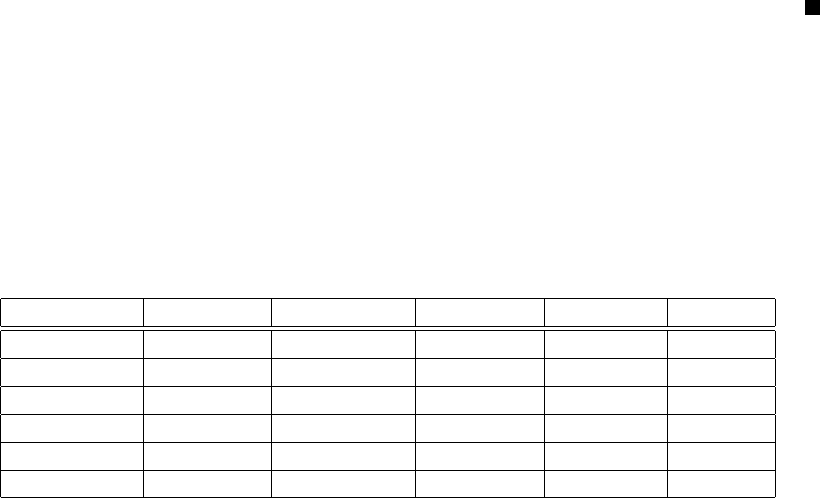

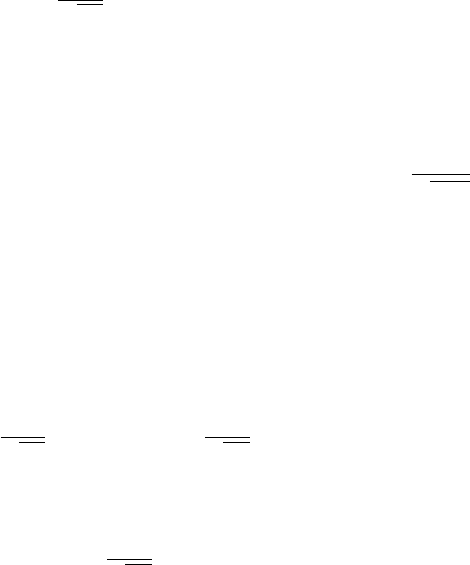

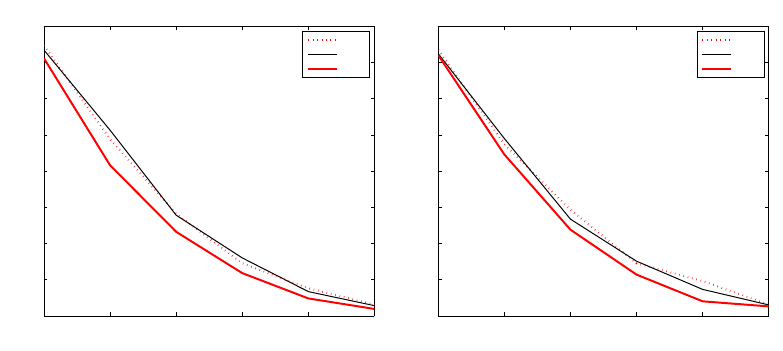

We illustrate the MMD density under both the null and alternative hypotheses by approximating it empirically for $p = q$ and $p \neq q$. Results are plotted in Figure 2.

Our goal is to determine whether the empirical test statistic $\mathrm{MMD}^2_u$ is so large as to be outside the $1-\alpha$ quantile of the null distribution in (10), which gives a level α test. Consistency of this test against local departures from the null hypothesis is provided by the following theorem, proved in Appendix B.2.

Theorem 13 Define $\rho_x$, $\rho_y$, and t as in Theorem 12, and write $\mu_q = \mu_p + g_t$, where $g_t \in H$ is chosen such that $\mu_p + g_t$ remains a valid mean embedding, and $\|g_t\|_H$ is made to approach zero as $t \to \infty$ to describe local departures from the null hypothesis. Then $\|g_t\|_H = c\,t^{-1/2}$ is the minimum distance between $\mu_p$ and $\mu_q$ distinguishable by the test.

An example of a local departure from the null hypothesis is described earlier in the discussion of the $L_2$ distance between Parzen window estimates (Section 3.3.1). The class of local alternatives considered in Theorem 13 is more general, however: for instance, Sriperumbudur et al. (2010b, Section 4) and Harchaoui et al. (2008, Section 5, long version) give examples of classes of perturbations $g_t$ with decreasing RKHS norm. These perturbations have the property that p differs from q at increasing frequencies, rather than simply with decreasing amplitude.
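Theorem 12 describes the null distribution only through the unknown eigenvalues $\lambda_l$, so in practice the $1-\alpha$ quantile has to be estimated from data. The sketch below (NumPy, Gaussian kernel, hypothetical function names) computes $\mathrm{MMD}^2_u$ in its general form, with all $mn$ cross terms, and approximates the null quantile by repeatedly re-splitting the pooled sample at random into groups of sizes m and n. This random-permutation resampling is an illustrative assumption; it is closely related to, but not necessarily identical with, the bootstrap of Arcones and Giné (1992) referred to in the text.

```python
import numpy as np


def gaussian_gram(a, b, sigma):
    """Gram matrix of the Gaussian kernel exp(-||u - v||^2 / (2 sigma^2))."""
    sq = np.sum(a**2, axis=1)[:, None] + np.sum(b**2, axis=1)[None, :] - 2 * a @ b.T
    return np.exp(-np.maximum(sq, 0.0) / (2 * sigma**2))


def mmd2_u(x, y, sigma):
    """Unbiased MMD^2_u: within-sample averages exclude the i == j terms."""
    m, n = len(x), len(y)
    kxx, kyy = gaussian_gram(x, x, sigma), gaussian_gram(y, y, sigma)
    kxy = gaussian_gram(x, y, sigma)
    return float((kxx.sum() - np.trace(kxx)) / (m * (m - 1))
                 + (kyy.sum() - np.trace(kyy)) / (n * (n - 1))
                 - 2 * kxy.mean())


def resampled_null_quantile(x, y, sigma, alpha=0.05, n_resamples=500, seed=0):
    """Estimate the 1 - alpha quantile of MMD^2_u under H0 from random re-splits of the pooled data."""
    rng = np.random.default_rng(seed)
    z, m = np.vstack([x, y]), len(x)
    stats = np.empty(n_resamples)
    for b in range(n_resamples):
        perm = rng.permutation(len(z))
        stats[b] = mmd2_u(z[perm[:m]], z[perm[m:]], sigma)
    return float(np.quantile(stats, 1 - alpha))


rng = np.random.default_rng(0)
x = rng.normal(size=(60, 1))
y = rng.normal(2.0, 1.0, size=(60, 1))
stat, thr = mmd2_u(x, y, 1.0), resampled_null_quantile(x, y, 1.0)
print(stat, thr, stat > thr)  # reject H0 when the statistic exceeds the estimated quantile
```

Re-splitting the pooled data mimics $H_0$ because, when $p = q$, every assignment of the m + n observations to the two groups is equally likely. Each resample costs $O((m+n)^2)$, which is the price of this approach relative to the moment-based alternatives.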

One way to estimate the $1-\alpha$ quantile of the null distribution is using the bootstrap on the aggregated data, following Arcones and Giné (1992). Alternatively, we may approximate the null distribution by fitting Pearson curves to its first four moments (Johnson et al., 1994, Section 18.8).

[Figure 2 plots. Left panel: "Empirical $\mathrm{MMD}^2_u$ density under H0"; right panel: "Empirical $\mathrm{MMD}^2_u$ density under H1"; x-axis: $\mathrm{MMD}^2_u$, y-axis: Prob. density.]

Figure 2: Left: Empirical distribution of the MMD under $H_0$, with p and q both Gaussians with unit standard deviation, using 50 samples from each. Right: Empirical distribution of the MMD under $H_A$, with p a Laplace distribution with unit standard deviation, and q a Laplace distribution with standard deviation $3\sqrt{2}$, using 100 samples from each. In both cases, the histograms were obtained by computing 2000 independent instances of the MMD.

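Taking advantage of the degeneracy of the U-statistic, we obtain for m = n
$$\mathbf{E}\left[\left(\mathrm{MMD}^2_u\right)^2\right] = \frac{2}{m(m-1)}\,\mathbf{E}_{z,z'}\left[h^2(z,z')\right]$$
and
$$\mathbf{E}\left[\left(\mathrm{MMD}^2_u\right)^3\right] = \frac{8(m-2)}{m^2(m-1)^2}\,\mathbf{E}_{z,z'}\left[h(z,z')\,\mathbf{E}_{z''}\left(h(z,z'')\,h(z',z'')\right)\right] + O(m^{-4}) \qquad (11)$$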

(see Appendix B.3), where h(z,z

′

) is defined in Lemma 6, z = (x,y) ∼ p×q where x and y are inde-

pendent, and z

′

,z

′′

are independent copies of z. The fourth moment E

MMD

2

u

4

is not computed,

since it is both very small, O(m

−4

), and expensive to calculate, O(m

4

). Instead, we replace the kur-

tosis

12

with a lower bound due to Wilkins (1944), kurt

MMD

2

u

≥

skew

MMD

2

u

2

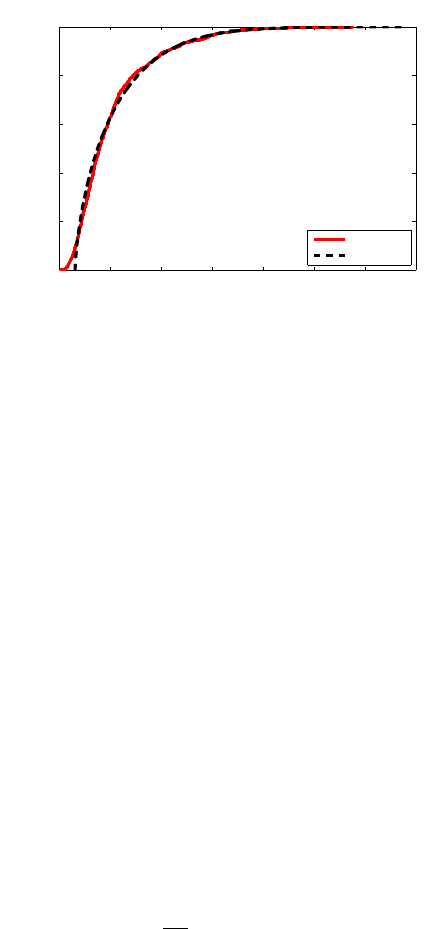

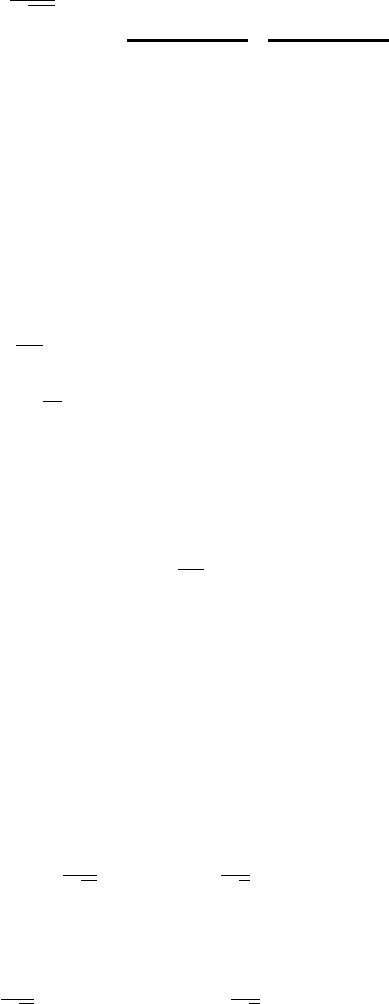

+1. In Figure

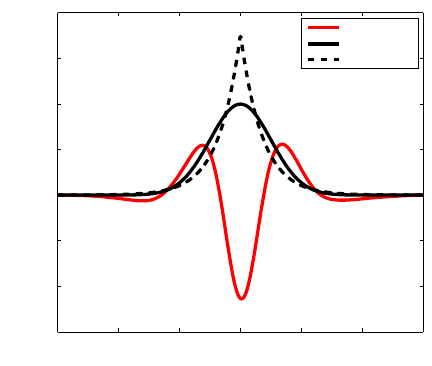

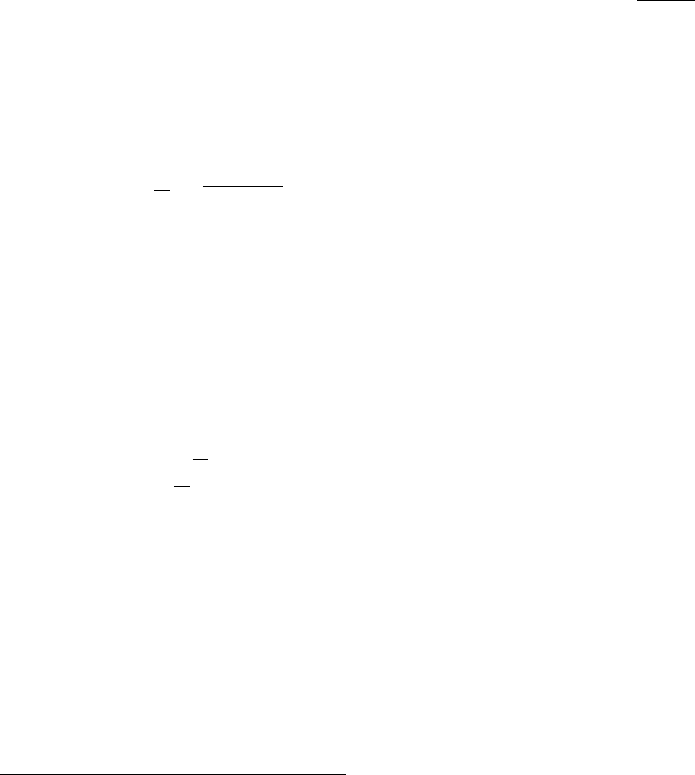

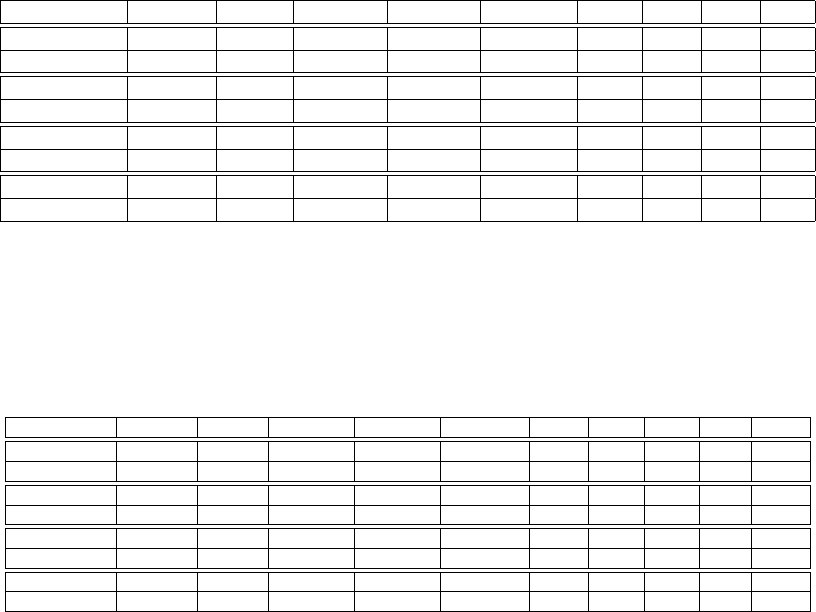

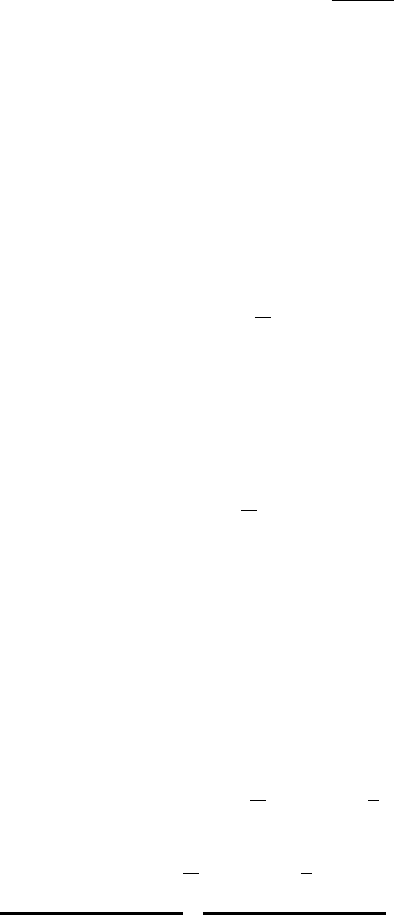

3, we illustrate the Pearson curve fit to the null distribution: the fit is good in the upper quantiles of

the distribution, where the test threshold is computed. Finally, we note that two alternative empiri-

cal estimates of the null distribution have more recently been proposed by Gretton et al. (2009): a

consistent estimate, based on an empirical computation of the eigenvalues λ

l

in (10); and an alter-

native Gamma approximation to the null distribution, which has a smaller computational cost but is

generally less accurate. Further detail and experimental comparisons are given by Gretton et al.
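The eigenvalue-based estimate mentioned above can be sketched as follows, under stated assumptions: the $\lambda_l$ in (10) are replaced by the eigenvalues of the centred Gram matrix of the pooled sample divided by the pooled sample size (a common plug-in for the eigenvalues of the integral operator in Theorem 12, appropriate under $H_0$ because all pooled points are then drawn from p), and the limit law (10) is simulated with $\rho_x = m/t$ and $\rho_y = n/t$. The Gaussian kernel, the truncation of numerically zero eigenvalues and the function name are illustrative choices, not a reproduction of the procedure of Gretton et al. (2009).

```python
import numpy as np


def gaussian_gram(a, b, sigma):
    """Gram matrix of the Gaussian kernel exp(-||u - v||^2 / (2 sigma^2))."""
    sq = np.sum(a**2, axis=1)[:, None] + np.sum(b**2, axis=1)[None, :] - 2 * a @ b.T
    return np.exp(-np.maximum(sq, 0.0) / (2 * sigma**2))


def spectral_null_quantile(x, y, sigma, alpha=0.05, n_draws=5000, seed=0):
    """Approximate the 1 - alpha quantile of MMD^2_u under H0 by simulating (10)."""
    z = np.vstack([x, y])
    t = len(z)                                  # t = m + n
    rho_x, rho_y = len(x) / t, len(y) / t
    c = np.eye(t) - np.full((t, t), 1.0 / t)    # centring matrix
    lam = np.linalg.eigvalsh(c @ gaussian_gram(z, z, sigma) @ c) / t
    lam = lam[lam > 1e-12]                      # drop numerically zero eigenvalues
    rng = np.random.default_rng(seed)
    a = rng.standard_normal((n_draws, lam.size))
    b = rng.standard_normal((n_draws, lam.size))
    terms = (a / np.sqrt(rho_x) - b / np.sqrt(rho_y)) ** 2 - 1.0 / (rho_x * rho_y)
    draws = terms @ lam                         # draws of t * MMD^2_u under H0
    return float(np.quantile(draws, 1 - alpha) / t)
```

Each bracketed term in (10) has mean $1/\rho_x + 1/\rho_y - (\rho_x\rho_y)^{-1} = 0$, so the simulated draws are centred at zero, as $\mathrm{MMD}^2_u$ is under $H_0$. The eigendecomposition costs $O((m+n)^3)$ once, after which further draws are cheap.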

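12. The kurtosis is defined in terms of the fourth and second moments as $\mathrm{kurt}\left(\mathrm{MMD}^2_u\right) = \dfrac{\mathbf{E}\left[\left(\mathrm{MMD}^2_u\right)^4\right]}{\left[\mathbf{E}\left[\left(\mathrm{MMD}^2_u\right)^2\right]\right]^2} - 3$.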


[Figure 3 plot: "CDF of the MMD and Pearson fit"; x-axis: t, y-axis: $P(\mathrm{MMD}^2_u < t)$; curves: Emp. CDF, Pearson.]

Figure 3: Illustration of the empirical CDF of the MMD and a Pearson curve fit. Both p and q were Gaussian with zero mean and unit variance, and 50 samples were drawn from each. The empirical CDF was computed on the basis of 1000 randomly generated MMD values. To ensure the quality of fit was determined only by the accuracy of the Pearson approximation, the moments used for the Pearson curves were also computed on the basis of these 1000 samples. The MMD used a Gaussian kernel with σ = 0.5.
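The moments entering a Pearson fit can be estimated by plugging sample averages into (11). The sketch below is one way to do this under stated assumptions: m = n, a Gaussian kernel, $h(z,z') = k(x,x') + k(y,y') - k(x,y') - k(x',y)$ (the form we assume for the kernel of Lemma 6), and expectations over independent $z, z', z''$ replaced by averages over distinct indices. With the diagonal of $H_{ij} = h(z_i,z_j)$ set to zero, the average over distinct triples reduces to $\mathrm{tr}(H^3)/(m(m-1)(m-2))$. Since $\mathrm{MMD}^2_u$ has mean zero under $H_0$, these raw moments are also central moments; the fourth moment is not estimated, in line with the text. Function names are hypothetical.

```python
import numpy as np


def h_matrix(x, y, sigma):
    """H[i, j] = h(z_i, z_j) for z_i = (x_i, y_i) and m = n, with zero diagonal."""
    def gram(a, b):
        sq = np.sum(a**2, axis=1)[:, None] + np.sum(b**2, axis=1)[None, :] - 2 * a @ b.T
        return np.exp(-np.maximum(sq, 0.0) / (2 * sigma**2))
    kxy = gram(x, y)                      # kxy[i, j] = k(x_i, y_j)
    H = gram(x, x) + gram(y, y) - kxy - kxy.T
    np.fill_diagonal(H, 0.0)
    return H


def plug_in_expectations(H):
    """Averages of h^2 over pairs i != j and of h_ij h_ik h_jk over distinct triples."""
    m = H.shape[0]
    e_h2 = np.sum(H**2) / (m * (m - 1))
    e_triple = np.trace(H @ H @ H) / (m * (m - 1) * (m - 2))
    return float(e_h2), float(e_triple)


def null_moments(H):
    """Variance, third moment and skewness of MMD^2_u under H0 from (11), O(m^-4) dropped."""
    m = H.shape[0]
    e_h2, e_triple = plug_in_expectations(H)
    var = 2.0 / (m * (m - 1)) * e_h2
    third = 8.0 * (m - 2) / (m**2 * (m - 1) ** 2) * e_triple
    return var, third, third / var**1.5
```

The trace identity works because $\mathrm{tr}(H^3) = \sum_{i,j,k} H_{ij}H_{jk}H_{ki}$, and every term with a repeated index contains a zero diagonal entry; the cost is dominated by the two $m \times m$ matrix products.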

6. A Linear Time Statistic and Test

The MMD-based tests are already more efficient than the $O(m^2 \log m)$ and $O(m^3)$ tests described in Section 3.3.3 (assuming m = n for conciseness). It is still desirable, however, to obtain O(m) tests

which do not sacrifice too much statistical power. Moreover, we would like to obtain tests which

have O(1) storage requirements for computing the test statistic, in order to apply the test to data

streams. We now describe how to achieve this by computing the test statistic using a subsampling

of the terms in the sum. The empirical estimate in this case is obtained by drawing pairs from X and

Y respectively without replacement.

Lemma 14 Define $m_2 := \lfloor m/2 \rfloor$, assume m = n, and define $h(z_1,z_2)$ as in Lemma 6. The estimator
$$\mathrm{MMD}^2_l[F,X,Y] := \frac{1}{m_2}\sum_{i=1}^{m_2} h\left((x_{2i-1}, y_{2i-1}), (x_{2i}, y_{2i})\right)$$
can be computed in linear time, and is an unbiased estimate of $\mathrm{MMD}^2[F,p,q]$.

While it is expected that $\mathrm{MMD}^2_l$ has higher variance than $\mathrm{MMD}^2_u$ (as we will see explicitly later), it is computationally much more appealing. In particular, the statistic can be used in stream computations requiring only O(1) memory, whereas $\mathrm{MMD}^2_u$ requires O(m) storage and $O(m^2)$ time to compute the kernel h on all interacting pairs.
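A minimal sketch of Lemma 14, assuming NumPy and a Gaussian kernel, and taking $h(z,z') = k(x,x') + k(y,y') - k(x,y') - k(x',y)$ for the kernel of Lemma 6. The streaming function reads each observation once and keeps only a running sum and a count, which is the O(1)-memory property described above; a vectorized version is included for comparison. With 0-based indexing the pairs $(z_{2i-1}, z_{2i})$ become consecutive rows, and if m is odd the last observation is dropped, matching $m_2 = \lfloor m/2 \rfloor$. Function names are hypothetical.

```python
import math

import numpy as np


def k_gauss(u, v, sigma=1.0):
    """Gaussian kernel for a single pair of points."""
    d = np.asarray(u, dtype=float) - np.asarray(v, dtype=float)
    return math.exp(-float(np.sum(d * d)) / (2 * sigma**2))


def mmd2_l_stream(xs, ys, sigma=1.0):
    """Stream over z_i = (x_i, y_i), consuming two observations per term of MMD^2_l."""
    total, count = 0.0, 0
    it = zip(xs, ys)
    for xa, ya in it:
        nxt = next(it, None)
        if nxt is None:  # odd m: the unpaired last observation is dropped
            break
        xb, yb = nxt
        total += (k_gauss(xa, xb, sigma) + k_gauss(ya, yb, sigma)
                  - k_gauss(xa, yb, sigma) - k_gauss(xb, ya, sigma))
        count += 1
    return total / count


def mmd2_l_vectorized(x, y, sigma=1.0):
    """Same estimate using array slicing (O(m) memory)."""
    m2 = len(x) // 2
    xa, xb = x[0:2 * m2:2], x[1:2 * m2:2]
    ya, yb = y[0:2 * m2:2], y[1:2 * m2:2]

    def k(u, v):
        return np.exp(-np.sum((u - v) ** 2, axis=1) / (2 * sigma**2))

    return float(np.mean(k(xa, xb) + k(ya, yb) - k(xa, yb) - k(xb, ya)))
```

Each observation enters exactly one term of the sum, which is why the estimate is cheap but also why it discards most of the pairwise information used by $\mathrm{MMD}^2_u$.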

Since $\mathrm{MMD}^2_l$ is just the average over a set of random variables, Hoeffding's bound and the central limit theorem readily allow us to provide both uniform convergence and asymptotic statements with little effort. The first follows directly from Hoeffding (1963, Theorem 2).


Theorem 15 Assume $0 \le k(x_i,x_j) \le K$. Then
$$\Pr_{X,Y}\left\{\mathrm{MMD}^2_l(F,X,Y) - \mathrm{MMD}^2(F,p,q) > t\right\} \le \exp\left(\frac{-t^2 m_2}{8K^2}\right)$$
where $m_2 := \lfloor m/2 \rfloor$ (the same bound applies for deviations of $-t$ and below).

Note that the bound of Theorem 10 is identical to that of Theorem 15, which shows the former is

rather loose. Next we invoke the central limit theorem (e.g., Serfling, 1980, Section 1.9).

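Corollary 16 Assume $0 < \mathbf{E}\left(h^2\right) < \infty$. Then $\mathrm{MMD}^2_l$ converges in distribution to a Gaussian according to
$$m^{\frac12}\left(\mathrm{MMD}^2_l - \mathrm{MMD}^2[F,p,q]\right) \xrightarrow{D} \mathcal{N}\left(0, \sigma_l^2\right),$$
where $\sigma_l^2 = 2\left[\mathbf{E}_{z,z'} h^2(z,z') - \left[\mathbf{E}_{z,z'} h(z,z')\right]^2\right]$, where we use the shorthand $\mathbf{E}_{z,z'} := \mathbf{E}_{z,z' \sim p \times q}$.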

The factor of 2 arises since we are averaging over only ⌊m/2⌋ observations. It is instructive to compare this asymptotic distribution with that of the quadratic time statistic $\mathrm{MMD}^2_u$ under $H_A$, when m = n. In this case, $\mathrm{MMD}^2_u$ converges in distribution to a Gaussian according to
$$m^{\frac12}\left(\mathrm{MMD}^2_u - \mathrm{MMD}^2[F,p,q]\right) \xrightarrow{D} \mathcal{N}\left(0, \sigma_u^2\right),$$
where $\sigma_u^2 = 4\left(\mathbf{E}_z\left[\left(\mathbf{E}_{z'} h(z,z')\right)^2\right] - \left[\mathbf{E}_{z,z'}\left(h(z,z')\right)\right]^2\right)$ (Serfling, 1980, Section 5.5). Thus for $\mathrm{MMD}^2_u$, the asymptotic variance is (up to scaling) the variance of $\mathbf{E}_{z'}[h(z,z')]$, whereas for $\mathrm{MMD}^2_l$ it is $\mathrm{Var}_{z,z'}[h(z,z')]$.
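Corollary 16 suggests a simple linear-time test, sketched below under assumptions. Under $H_0$, $\mathrm{MMD}^2[F,p,q] = 0$, and $\mathrm{MMD}^2_l$ is the mean of $m_2$ independent terms $h_i = h(z_{2i-1}, z_{2i})$, so $\sqrt{m_2}\,\mathrm{MMD}^2_l$ divided by the sample standard deviation of the $h_i$ is approximately standard normal (equivalently, $\sqrt{m}\,\mathrm{MMD}^2_l$ has variance $\sigma_l^2 = 2\,\mathrm{Var}(h)$, the factor of 2 noted above). Since $\mathrm{MMD}^2 \ge 0$, a one-sided upper-tail test is used. Plugging in the sample standard deviation, the Gaussian kernel in the helper, and the function names are our illustrative choices.

```python
import math

import numpy as np


def pair_terms(x, y, sigma=1.0):
    """h(z_{2i-1}, z_{2i}) for consecutive pairs of rows, Gaussian kernel."""
    m2 = len(x) // 2
    xa, xb, ya, yb = x[0:2 * m2:2], x[1:2 * m2:2], y[0:2 * m2:2], y[1:2 * m2:2]

    def k(u, v):
        return np.exp(-np.sum((u - v) ** 2, axis=1) / (2 * sigma**2))

    return k(xa, xb) + k(ya, yb) - k(xa, yb) - k(xb, ya)


def linear_time_test(h, alpha=0.05):
    """h: the m2 values h(z_{2i-1}, z_{2i}). Returns (MMD^2_l, z-score, p-value, reject)."""
    h = np.asarray(h, dtype=float)
    stat = float(h.mean())
    z = math.sqrt(h.size) * stat / float(h.std(ddof=1))
    p_value = 0.5 * math.erfc(z / math.sqrt(2.0))  # Pr(N(0, 1) > z)
    return stat, z, p_value, p_value < alpha
```

Because the threshold comes from a Gaussian rather than from the infinite weighted sum in (10), no resampling or eigendecomposition is needed, and the whole test runs in O(m) time.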

We end by noting another potential approach to reducing the cost of computing an empirical MMD estimate, by using a low rank approximation to the Gram matrix (Fine and Scheinberg, 2001; Williams and Seeger, 2001; Smola and Schölkopf, 2000). An incremental computation of the MMD based on such a low rank approximation would require O(md) storage and O(md) computation (where d is the rank of the approximate Gram matrix which is used to factorize both matrices) rather than O(m) storage and $O(m^2)$ operations. That said, it remains to be determined what effect this approximation would have on the distribution of the test statistic under $H_0$, and hence on the test threshold.

7. Related Metrics and Learning Problems

The present section discusses a number of topics related to the maximum mean discrepancy, including metrics on probability distributions using non-RKHS function classes (Sections 7.1 and 7.2), the

relation with set kernels and kernels on probability measures (Section 7.3), an extension to kernel

measures of independence (Section 7.4), a two-sample statistic using a distribution over witness

functions (Section 7.5), and a connection to outlier detection (Section 7.6).

7.1 The MMD in Other Function Classes

The definition of the maximum mean discrepancy is by no means limited to RKHS. In fact, any

function class F that comes with uniform convergence guarantees and is sufficiently rich will enjoy

the above properties. Below, we consider the case where the scaled functions in F are dense in C(X)

(which is useful for instance when the functions in F are norm constrained).


Definition 17 Let F be a subset of some vector space. The star S[F] of a set F is
$$S[F] := \left\{\alpha f \mid f \in F \text{ and } \alpha \in [0,\infty)\right\}.$$

Theorem 18 Denote by F the subset of some vector space of functions from X to $\mathbb{R}$ for which $S[F] \cap C(X)$ is dense in $C(X)$ with respect to the $L_\infty(X)$ norm. Then MMD[F, p, q] = 0 if and only if p = q, and MMD[F, p, q] is a metric on the space of probability distributions. Whenever the star of F is not dense, the MMD defines a pseudo-metric space.

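Proof It is clear that p = q implies MMD[F, p, q] = 0. The proof of the converse is very similar to that of Theorem 5. Define $H := S[F] \cap C(X)$. Since by assumption H is dense in $C(X)$, for any $f \in C(X)$ and $\epsilon > 0$ there exists an $h^* \in H$ satisfying $\|h^* - f\|_\infty < \epsilon$. Write $h^* := \alpha^* g^*$, where $g^* \in F$ and $\alpha^* \ge 0$. Since MMD[F, p, q] = 0 by assumption and $g^* \in F$, we have $\mathbf{E}_x g^* - \mathbf{E}_y g^* = 0$. Thus we have the bound
$$\left|\mathbf{E}_x f(x) - \mathbf{E}_y f(y)\right| \le \left|\mathbf{E}_x f(x) - \mathbf{E}_x h^*(x)\right| + \alpha^*\left|\mathbf{E}_x g^*(x) - \mathbf{E}_y g^*(y)\right| + \left|\mathbf{E}_y h^*(y) - \mathbf{E}_y f(y)\right| \le 2\epsilon$$
for all $f \in C(X)$ and $\epsilon > 0$, which implies p = q by Lemma 1.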

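To show MMD[F, p, q] is a metric, it remains to prove the triangle inequality. We have
$$\sup_{f\in F}\left|\mathbf{E}_p f - \mathbf{E}_q f\right| + \sup_{g\in F}\left|\mathbf{E}_q g - \mathbf{E}_r g\right| \ge \sup_{f\in F}\left[\left|\mathbf{E}_p f - \mathbf{E}_q f\right| + \left|\mathbf{E}_q f - \mathbf{E}_r f\right|\right] \ge \sup_{f\in F}\left|\mathbf{E}_p f - \mathbf{E}_r f\right|.$$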

Note that any uniform convergence statements in terms of F allow us immediately to characterize

an estimator of MMD(F, p,q) explicitly. The following result shows how (this reasoning is also the

basis for the proofs in Section 4, although here we do not restrict ourselves to an RKHS).

Theorem 19 Let $\delta \in (0,1)$ be a confidence level and assume that for some $\epsilon(\delta,m,F)$ the following holds for samples $\{x_1, \ldots, x_m\}$ drawn from p:
$$\Pr_X\left\{\sup_{f\in F}\left|\mathbf{E}_x[f] - \frac{1}{m}\sum_{i=1}^{m} f(x_i)\right| > \epsilon(\delta,m,F)\right\} \le \delta.$$
In this case we have that
$$\Pr_{X,Y}\left\{\left|\mathrm{MMD}[F,p,q] - \mathrm{MMD}_b[F,X,Y]\right| > 2\epsilon(\delta/2,m,F)\right\} \le \delta,$$
where $\mathrm{MMD}_b[F,X,Y]$ is taken from Definition 2.

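Proof The proof works simply by using convexity and suprema as follows:
$$\begin{aligned}
\left|\mathrm{MMD}[F,p,q] - \mathrm{MMD}_b[F,X,Y]\right| &= \left|\sup_{f\in F}\left|\mathbf{E}_x[f] - \mathbf{E}_y[f]\right| - \sup_{f\in F}\left|\frac{1}{m}\sum_{i=1}^{m} f(x_i) - \frac{1}{n}\sum_{i=1}^{n} f(y_i)\right|\right| \\
&\le \sup_{f\in F}\left|\mathbf{E}_x[f] - \mathbf{E}_y[f] - \frac{1}{m}\sum_{i=1}^{m} f(x_i) + \frac{1}{n}\sum_{i=1}^{n} f(y_i)\right| \\
&\le \sup_{f\in F}\left|\mathbf{E}_x[f] - \frac{1}{m}\sum_{i=1}^{m} f(x_i)\right| + \sup_{f\in F}\left|\mathbf{E}_y[f] - \frac{1}{n}\sum_{i=1}^{n} f(y_i)\right|.
\end{aligned}$$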


Bounding each of the two terms via a uniform convergence bound proves the claim.

This shows that $\mathrm{MMD}_b[F,X,Y]$ can be used to estimate MMD[F, p, q], and that the quantity is asymptotically unbiased.

Remark 20 (Reduction to Binary Classification) As noted by Friedman (2003), any classifier which maps a set of observations $\{z_i, l_i\}$ with $z_i \in X$ on some domain X and labels $l_i \in \{\pm 1\}$, for which uniform convergence bounds exist on the convergence of the empirical loss to the expected loss, can be used to obtain a similarity measure on distributions: simply assign $l_i = 1$ if $z_i \in X$ and $l_i = -1$ for $z_i \in Y$ and find a classifier which is able to separate the two sets. In this case maximization of $\mathbf{E}_x[f] - \mathbf{E}_y[f]$ is achieved by ensuring that as many $z \sim p(z)$ as possible correspond to $f(z) = 1$, whereas for as many $z \sim q(z)$ as possible we have $f(z) = -1$. Consequently neural networks, decision trees, boosted classifiers and other objects for which uniform convergence bounds can be obtained can be used for the purpose of distribution comparison. Metrics and divergences on distributions can also be defined explicitly starting from classifiers. For instance, Sriperumbudur et al. (2009, Section 2) show the MMD minimizes the expected risk of a classifier with linear loss on the samples X and Y, and Ben-David et al. (2007, Section 4) use the error of a hyperplane classifier to approximate the A-distance between distributions (Kifer et al., 2004). Reid and Williamson (2011) provide further discussion and examples.

7.2 Examples of Non-RKHS Function Classes

Other function spaces F inspired by the statistics literature can also be considered in defining the

MMD. Indeed, Lemma 1 defines an MMD with F the space of bounded continuous real-valued

functions, which is a Banach space with the supremum norm (Dudley, 2002, p. 158). We now

describe two further metrics on the space of probability distributions, namely the Kolmogorov-

Smirnov and Earth Mover’s distances, and their associated function classes.

7.2.1 KOLMOGOROV-SMIRNOV STATISTIC

The Kolmogorov-Smirnov (K-S) test is probably one of the most famous two-sample tests in statistics. It works for random variables $x \in \mathbb{R}$ (or any other set for which we can establish a total order). Denote by $F_p(x)$ the cumulative distribution function of p and let $F_X(x)$ be its empirical counterpart,
$$F_p(z) := \Pr\left\{x \le z \text{ for } x \sim p\right\} \quad\text{and}\quad F_X(z) := \frac{1}{|X|}\sum_{i=1}^{m} \mathbf{1}_{x_i \le z}.$$

It is clear that $F_p$ captures the properties of p. The Kolmogorov metric is simply the $L_\infty$ distance $\|F_X - F_Y\|_\infty$ for two sets of observations X and Y. Smirnov (1939) showed that for p = q the limiting distribution of the empirical cumulative distribution functions satisfies
$$\lim_{m,n\to\infty} \Pr_{X,Y}\left\{\left[\frac{mn}{m+n}\right]^{\frac12}\|F_X - F_Y\|_\infty > x\right\} = 2\sum_{j=1}^{\infty}(-1)^{j-1} e^{-2j^2x^2} \quad\text{for } x \ge 0, \qquad (12)$$

which is distribution independent. This allows for an efficient characterization of the distribution under the null hypothesis $H_0$. Efficient numerical approximations to (12) can be found in numerical analysis handbooks (Press et al., 1994). The distribution under the alternative $p \neq q$, however, is unknown.
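A minimal sketch of the two-sample K-S statistic and the limiting tail probability (12), assuming NumPy (function names hypothetical). Both empirical CDFs are right-continuous step functions that jump only at sample points, so the supremum of $|F_X - F_Y|$ is attained at one of the pooled observations and it suffices to evaluate both CDFs there. The series in (12) is truncated once its terms fall below $e^{-40}$; the resulting p-value is asymptotic, as (12) is.

```python
import math

import numpy as np


def ks_statistic(x, y):
    """||F_X - F_Y||_inf with F_X(z) = #{i : x_i <= z} / m, evaluated at the pooled points."""
    x = np.sort(np.asarray(x, dtype=float))
    y = np.sort(np.asarray(y, dtype=float))
    grid = np.concatenate([x, y])
    fx = np.searchsorted(x, grid, side="right") / x.size
    fy = np.searchsorted(y, grid, side="right") / y.size
    return float(np.max(np.abs(fx - fy)))


def smirnov_tail(lam):
    """Right-hand side of (12): the limiting Pr{sqrt(mn/(m+n)) ||F_X - F_Y|| > lam}."""
    if lam <= 0:
        return 1.0
    n_terms = int(math.ceil(math.sqrt(20.0) / lam)) + 1  # later terms are below e^{-40}
    s = sum((-1) ** (j - 1) * math.exp(-2.0 * j * j * lam * lam)
            for j in range(1, n_terms + 1))
    return min(1.0, max(0.0, 2.0 * s))


def ks_test(x, y):
    """Return (statistic, asymptotic p-value)."""
    m, n = len(x), len(y)
    d = ks_statistic(x, y)
    return d, smirnov_tail(math.sqrt(m * n / (m + n)) * d)
```

For example, `smirnov_tail(1.358)` is approximately 0.05, the familiar 5% critical value of the scaled statistic.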


The Kolmogorov metric is, in fact, a special instance of MMD[F, p, q] for a certain Banach space (Müller, 1997, Theorem 5.2).

Proposition 21 Let F be the class of functions $X \to \mathbb{R}$ of bounded variation 1.$^{13}$ Then
$$\mathrm{MMD}[F,p,q] = \|F_p - F_q\|_\infty.$$

7.2.2 EARTH-MOVER DISTANCES

Another class of distance measures on distributions that may be written as maximum mean discrepancies are the Earth-Mover distances. We assume $(X, \rho)$ is a separable metric space, and define $P_1(X)$ to be the space of probability measures on X for which $\int \rho(x,z)\,dp(z) < \infty$ for all $p \in P_1(X)$ and $x \in X$ (these are the probability measures for which $\mathbf{E}_x|x| < \infty$ when $X = \mathbb{R}$). We then have the following definition (Dudley, 2002, p. 420).

Definition 22 (Monge-Wasserstein metric) Let $p \in P_1(X)$ and $q \in P_1(X)$. The Monge-Wasserstein distance is defined as
$$W(p,q) := \inf_{\mu \in M(p,q)} \int \rho(x,y)\,d\mu(x,y),$$
where M(p, q) is the set of joint distributions on $X \times X$ with marginals p and q.

We may interpret this as the cost (as represented by the metric ρ(x, y)) of transferring mass distributed according to p to a distribution in accordance with q, where µ is the movement schedule.

In general, a large variety of costs of moving mass from x to y can be used, such as psycho-optical

similarity measures in image retrieval (Rubner et al., 2000). The following theorem provides the

link with the MMD (Dudley, 2002, Theorem 11.8.2).

Theorem 23 (Kantorovich-Rubinstein) Let $p \in P_1(X)$ and $q \in P_1(X)$, where X is separable. Then a metric on $P_1(X)$ is defined as
$$W(p,q) = \|p - q\|^*_L = \sup_{\|f\|_L \le 1}\left|\int f\,d(p-q)\right|,$$
where
$$\|f\|_L := \sup_{x \neq y \in X}\frac{|f(x) - f(y)|}{\rho(x,y)}$$
is the Lipschitz seminorm$^{14}$ for real valued f on X.

A simple example of this theorem is as follows (Dudley, 2002, Exercise 1, p. 425).

Example 2 Let $X = \mathbb{R}$ with associated $\rho(x,y) = |x - y|$. Then given f such that $\|f\|_L \le 1$, we use integration by parts to obtain
$$\left|\int f\,d(p-q)\right| = \left|\int (F_p - F_q)(x)\,f'(x)\,dx\right| \le \int \left|F_p - F_q\right|(x)\,dx,$$
where the maximum is attained for the function g with derivative $g' = 2\,\mathbf{1}_{F_p > F_q} - 1$ (and for which $\|g\|_L = 1$). We recover the $L_1$ distance between distribution functions,
$$W(p,q) = \int \left|F_p - F_q\right|(x)\,dx.$$

13. A function f defined on [a, b] is of bounded variation C if the total variation is bounded by C, that is, the supremum over all sums $\sum_{1 \le i \le n} |f(x_i) - f(x_{i-1})|$, where $a \le x_0 \le \ldots \le x_n \le b$ (Dudley, 2002, p. 184).

14. A seminorm satisfies the requirements of a norm besides $\|x\| = 0$ only for $x = 0$ (Dudley, 2002, p. 156).
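Example 2 can be checked numerically for empirical distributions on $\mathbb{R}$. The sketch below (NumPy; function name hypothetical) computes $\int |F_X - F_Y|(x)\,dx$ exactly: both empirical CDFs are constant between consecutive pooled sample points, so the integral is a finite sum of interval lengths multiplied by the CDF gap on each interval. For a pure translation $y = x + c$ the result is $c$, as expected for the Monge-Wasserstein distance.

```python
import numpy as np


def wasserstein_1d(x, y):
    """W(p, q) = int |F_X - F_Y|(t) dt for the empirical distributions of x and y."""
    x = np.sort(np.asarray(x, dtype=float))
    y = np.sort(np.asarray(y, dtype=float))
    grid = np.sort(np.concatenate([x, y]))
    # Both CDFs are constant on [grid[k], grid[k + 1]); evaluate them at the left endpoints.
    fx = np.searchsorted(x, grid[:-1], side="right") / x.size
    fy = np.searchsorted(y, grid[:-1], side="right") / y.size
    return float(np.sum(np.abs(fx - fy) * np.diff(grid)))


rng = np.random.default_rng(0)
x = rng.normal(size=500)
print(wasserstein_1d(x, x + 2.0))  # a translation by 2 gives distance 2 (up to rounding)
```

In contrast to the K-S statistic, which takes the supremum of $|F_X - F_Y|$, this distance integrates the same gap, consistent with the two function classes (bounded variation versus Lipschitz) in Proposition 21 and Theorem 23.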

One may further generalize Theorem 23 to the set of all laws P(X) on arbitrary metric spaces X

(Dudley, 2002, Proposition 11.3.2).

Definition 24 (Bounded Lipschitz metric) Let p and q be laws on a metric space X. Then

β(p,q) := sup

k

f

k